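1. Environment

| Name | IP address | Role | Deployment |
| --- | --- | --- | --- |
| crawlab-master | 192.168.99.52 | master node | docker-compose; directories: /data/crawlab, /data/mongo, /data/redis |
| crawlab-work01 | 192.168.99.53 | worker node | docker-compose; directory: /data/crawlab |
| crawlab-work02 | 192.168.99.54 | worker node | docker-compose; directory: /data/crawlab |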

2. Deploying Crawlab

2.0 Install Docker and docker-compose

    $ curl https://www.putianhui.cn/package/script/install_docker.sh | bash

2.1 Initialize the hosts

Set the hostname on each host according to its role.

    # On crawlab-master (192.168.99.52)
    cat > /etc/hostname <<EOF
    crawlab-master
    EOF
    hostnamectl set-hostname crawlab-master && bash

    # On crawlab-work01 (192.168.99.53)
    cat > /etc/hostname <<EOF
    crawlab-work01
    EOF
    hostnamectl set-hostname crawlab-work01 && bash

    # On crawlab-work02 (192.168.99.54)
    cat > /etc/hostname <<EOF
    crawlab-work02
    EOF
    hostnamectl set-hostname crawlab-work02 && bash
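If the same script is pushed to all three hosts, the hostname can be derived from the node's IP instead of being typed by hand. The following is a minimal sketch under that assumption; the function name `hostname_for_ip` and the `NODE_IP` variable are illustrative and not part of the original procedure, and the mapping simply mirrors the environment table.

```shell
#!/bin/sh
# Map a node IP to its hostname, following the environment table above.
# hostname_for_ip is a hypothetical helper name used for illustration.
hostname_for_ip() {
    case "$1" in
        192.168.99.52) echo "crawlab-master" ;;
        192.168.99.53) echo "crawlab-work01" ;;
        192.168.99.54) echo "crawlab-work02" ;;
        *) echo "unknown IP: $1" >&2; return 1 ;;
    esac
}

# Example (run as root on a node; NODE_IP is a placeholder you set yourself):
#   name=$(hostname_for_ip "$NODE_IP") && hostnamectl set-hostname "$name"
```

Unknown addresses return a non-zero status, so the `&&` in the example prevents `hostnamectl` from running with an empty name.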

Install Docker and docker-compose on every node:

    curl https://www.putianhui.cn/package/script/install_docker.sh | bash

2.2 Deploy Crawlab-master

Note: the environment variables supported by the image are documented at https://docs.crawlab.cn/zh/Config/

Create the data directories:

    mkdir -p /data/crawlab /data/mongo /data/redis

Create the docker-compose.yml file for the master node:

    $ cd /data/crawlab
    $ vim docker-compose.yml

with the following content:

    version: '3.3'
    services:
      master:
        restart: always
        image: tikazyq/crawlab:latest
        container_name: crawlab_master
        network_mode: "host"
        environment:
          CRAWLAB_SERVER_MASTER: "Y"                   # this node is the master
          CRAWLAB_SERVER_REGISTER_TYPE: "ip"
          CRAWLAB_SERVER_REGISTER_IP: "192.168.99.52"  # this host's own IP
          CRAWLAB_MONGO_HOST: "192.168.99.52"
          CRAWLAB_MONGO_PORT: "27017"
          CRAWLAB_MONGO_DB: "crawlab_test"
          CRAWLAB_REDIS_ADDRESS: "192.168.99.52"
          CRAWLAB_REDIS_PASSWORD: ""
          CRAWLAB_REDIS_PORT: "6379"
          CRAWLAB_REDIS_DATABASE: "1"
      mongo:
        restart: always
        image: mongo:5.0.4
        container_name: crawlab_mongo
        network_mode: "host"
        volumes:
          - "/data/mongo:/data/db"
          - "/etc/localtime:/etc/localtime"
      redis:
        restart: always
        image: redis:6.2
        container_name: crawlab_redis
        network_mode: "host"
        volumes:
          - "/data/redis:/data"
          - "/etc/localtime:/etc/localtime"

Start the services with docker-compose:

    $ pwd
    /data/crawlab

    $ ls
    docker-compose.yml

    $ docker-compose up -d

    $ docker-compose logs -f

Once the services are up, the master exposes port 8080. Open http://MasterIP:8080 in a browser and log in to the platform with the default account admin/admin.

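To reach Crawlab through a domain name instead, edit the nginx configuration inside the crawlab_master container and add a server_name directive.

The steps for domain access are as follows.

Run them on the master node. If you do not need domain access, skip ahead to section 2.3.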

List the containers running on the master node:

    $ docker ps
    CONTAINER ID   IMAGE                    COMMAND                  CREATED          STATUS             PORTS   NAMES
    b175330afc71   mongo:5.0.4              "docker-entrypoint.s…"   50 minutes ago   Up About an hour           crawlab_mongo
    e93d8c03f0b6   tikazyq/crawlab:latest   "/bin/bash /app/dock…"   50 minutes ago   Up About an hour           crawlab_master
    bde0f5438f86   redis:6.2                "docker-entrypoint.s…"   50 minutes ago   Up About an hour           crawlab_redis

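Save the Crawlab nginx configuration from the master container to a local file:

    # No -it here: allocating a TTY would add carriage returns to the redirected output
    $ docker exec crawlab_master cat /etc/nginx/conf.d/crawlab.conf > crawlab.conf.bak

    $ ls
    crawlab.conf.bak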

Make a working copy of crawlab.conf.bak and add the domain name to it:

    $ cp crawlab.conf.bak crawlab.conf
    $ vim crawlab.conf

The edited file looks like this:

    server {
        gzip on;
        gzip_min_length 1k;
        gzip_buffers 4 16k;
        gzip_comp_level 2;
        gzip_types text/plain application/javascript application/x-javascript text/css application/xml text/javascript application/x-httpd-php image/jpeg image/gif image/png;
        gzip_vary off;
        gzip_disable "MSIE [1-6]\.";
        client_max_body_size 200m;
        listen 80;
        server_name crawlab.putianhui.cn;   # the domain name to serve
        root /app/dist;
        index index.html;

        location /api/ {
            rewrite /api/(.*) /$1 break;
            proxy_pass http://localhost:8000/;
        }
    }
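The manual edit can also be scripted, which helps when the container is recreated and the change has to be reapplied. The sketch below is one possible approach, not part of the original procedure: `set_server_name` is a hypothetical helper that replaces an existing server_name line or, if there is none, inserts one right after the first-level `listen` line. It assumes a single-server config file like the one shown here.

```shell
#!/bin/sh
# set_server_name FILE DOMAIN
# Hypothetical helper: set or insert the server_name directive in an nginx
# config file that contains a single server block.
set_server_name() {
    conf=$1
    domain=$2
    tmp=$(mktemp) || return 1
    if grep -Eq '^[[:space:]]*server_name[[:space:]]' "$conf"; then
        # A server_name line exists: rewrite it, keeping its indentation.
        sed -E "s/^([[:space:]]*)server_name[[:space:]].*;/\1server_name ${domain};/" \
            "$conf" > "$tmp"
    else
        # No server_name yet: add one after each listen line, same indentation.
        awk -v d="$domain" '
            { print }
            /^[ \t]*listen[ \t]/ {
                match($0, /^[ \t]*/)
                printf "%sserver_name %s;\n", substr($0, 1, RLENGTH), d
            }' "$conf" > "$tmp"
    fi
    # Write back in place so the original file permissions are preserved.
    cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# Example: set_server_name crawlab.conf crawlab.putianhui.cn
```

Running `nginx -t` inside the container after copying the file back remains the real validation step; the helper only edits text.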

Copy the modified configuration back into the master container, check it, and reload nginx:

    $ docker cp ./crawlab.conf crawlab_master:/etc/nginx/conf.d/crawlab.conf

    # Validate the configuration before reloading
    $ docker exec -it crawlab_master nginx -t

    $ docker exec -it crawlab_master nginx -s reload

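On the client machine, add an entry to the hosts file or set up DNS resolution for the domain. Crawlab is then reachable in a browser at the domain you configured.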
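For the hosts-file route, the entry maps the master's IP to the domain. As an illustration only, the sketch below adds that mapping idempotently; `add_hosts_entry` is a hypothetical helper name, and the file path is passed in so the function can be tried on a scratch file before touching /etc/hosts (which requires root).

```shell
#!/bin/sh
# add_hosts_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" to a hosts file unless a
# non-comment line already lists NAME as one of its host names.
add_hosts_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    if awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file" 2>/dev/null; then
        return 0   # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example (as root on the client):
#   add_hosts_entry /etc/hosts 192.168.99.52 crawlab.putianhui.cn
```

Because the check compares whole fields, a longer name that merely contains the domain does not count as a match.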

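2.3 Deploy Crawlab-node01

Create the data directory /data/crawlab on crawlab-work01, in the same way as on the master (mkdir -p /data/crawlab).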

Create the docker-compose.yml file for the work01 node:

    $ cd /data/crawlab
    $ vim docker-compose.yml

with the following content:

    version: '3.3'
    services:
      master:   # service key only; CRAWLAB_SERVER_MASTER "N" makes this a worker
        restart: always
        image: tikazyq/crawlab:latest
        container_name: crawlab_node01
        network_mode: "host"
        environment:
          CRAWLAB_SERVER_MASTER: "N"
          CRAWLAB_SERVER_REGISTER_TYPE: "ip"
          CRAWLAB_SERVER_REGISTER_IP: "192.168.99.53"  # this worker's own IP
          CRAWLAB_MONGO_HOST: "192.168.99.52"          # MongoDB on the master host
          CRAWLAB_MONGO_PORT: "27017"
          CRAWLAB_MONGO_DB: "crawlab_test"
          CRAWLAB_REDIS_ADDRESS: "192.168.99.52"       # Redis on the master host
          CRAWLAB_REDIS_PASSWORD: ""
          CRAWLAB_REDIS_PORT: "6379"
          CRAWLAB_REDIS_DATABASE: "1"

Start the service with docker-compose:

    $ pwd
    /data/crawlab

    $ ls
    docker-compose.yml

    $ docker-compose up -d

    $ docker-compose logs -f

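2.4 Deploy Crawlab-node02

Create the data directory /data/crawlab on crawlab-work02, in the same way as on the master (mkdir -p /data/crawlab).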

Create the docker-compose.yml file for the work02 node:

    $ cd /data/crawlab
    $ vim docker-compose.yml

with the following content:

    version: '3.3'
    services:
      master:   # service key only; CRAWLAB_SERVER_MASTER "N" makes this a worker
        restart: always
        image: tikazyq/crawlab:latest
        container_name: crawlab_node02
        network_mode: "host"
        environment:
          CRAWLAB_SERVER_MASTER: "N"
          CRAWLAB_SERVER_REGISTER_TYPE: "ip"
          CRAWLAB_SERVER_REGISTER_IP: "192.168.99.54"  # this worker's own IP
          CRAWLAB_MONGO_HOST: "192.168.99.52"          # MongoDB on the master host
          CRAWLAB_MONGO_PORT: "27017"
          CRAWLAB_MONGO_DB: "crawlab_test"
          CRAWLAB_REDIS_ADDRESS: "192.168.99.52"       # Redis on the master host
          CRAWLAB_REDIS_PASSWORD: ""
          CRAWLAB_REDIS_PORT: "6379"
          CRAWLAB_REDIS_DATABASE: "1"

Start the service with docker-compose:

    $ pwd
    /data/crawlab

    $ ls
    docker-compose.yml

    $ docker-compose up -d

    $ docker-compose logs -f
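The two worker files differ only in container_name and CRAWLAB_SERVER_REGISTER_IP, so they can be generated from one template when more workers are added. The following sketch assumes that pattern holds; `render_worker_compose` and `MASTER_IP` are illustrative names, and the service key is written as `worker` here purely as a label (the files above use `master`).

```shell
#!/bin/sh
# Host running MongoDB and Redis (the master in this setup).
MASTER_IP="192.168.99.52"

# render_worker_compose CONTAINER_NAME WORKER_IP
# Hypothetical helper: print a worker docker-compose.yml to stdout.
render_worker_compose() {
    name=$1
    ip=$2
    cat <<EOF
version: '3.3'
services:
  worker:
    restart: always
    image: tikazyq/crawlab:latest
    container_name: ${name}
    network_mode: "host"
    environment:
      CRAWLAB_SERVER_MASTER: "N"
      CRAWLAB_SERVER_REGISTER_TYPE: "ip"
      CRAWLAB_SERVER_REGISTER_IP: "${ip}"
      CRAWLAB_MONGO_HOST: "${MASTER_IP}"
      CRAWLAB_MONGO_PORT: "27017"
      CRAWLAB_MONGO_DB: "crawlab_test"
      CRAWLAB_REDIS_ADDRESS: "${MASTER_IP}"
      CRAWLAB_REDIS_PASSWORD: ""
      CRAWLAB_REDIS_PORT: "6379"
      CRAWLAB_REDIS_DATABASE: "1"
EOF
}

# Example (on crawlab-work02):
#   render_worker_compose crawlab_node02 192.168.99.54 > /data/crawlab/docker-compose.yml
```

Keeping the master address in one variable means a change of the MongoDB/Redis host touches a single line rather than every worker file.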

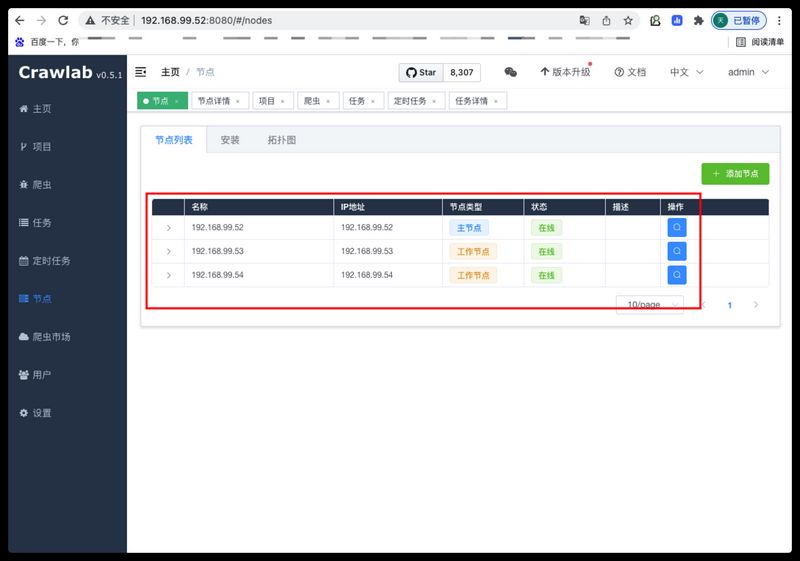

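2.5 Verify the deployment

Open http://MasterIP:8080 in a browser and log in to the platform with the default account admin/admin.

Once the master and the worker nodes have all started, they are listed on the platform's Nodes page, which indicates that the deployment succeeded.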
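Before opening the browser, it can be convenient to wait until the web UI actually answers. The sketch below polls a URL with curl until it returns HTTP 200; `wait_for_http` is a hypothetical helper, and the attempt count and delay are arbitrary defaults rather than values from the original setup. It only confirms that the page is served; node registration still has to be checked on the Nodes page.

```shell
#!/bin/sh
# wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
# Hypothetical helper: poll URL until it answers with HTTP 200.
wait_for_http() {
    url=$1
    attempts=${2:-30}
    delay=${3:-2}
    i=1
    while [ "$i" -le "$attempts" ]; do
        # -w prints only the status code; connection errors count as 000.
        code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url") || code=000
        if [ "$code" = "200" ]; then
            echo "ready: $url"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "not ready after $attempts attempts: $url" >&2
    return 1
}

# Example: wait_for_http "http://192.168.99.52:8080"
```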