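All Helm deployments in this document were carried out on a Kubernetes 1.24.6 cluster. Older or newer versions may not support some of the APIs used here, so check compatibility for your own cluster version.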
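Since API availability depends on the cluster version, it can help to check which API group versions the cluster serves before installing a chart. The following is a minimal sketch, not part of the original setup: `has_api_version` is a hypothetical helper name, and it assumes the input is the one-per-line list printed by `kubectl api-versions`.

```shell
#!/usr/bin/env bash
# has_api_version: hypothetical helper, not part of kubectl or Helm.
# Reads API group/versions on stdin (one per line, the format printed by
# `kubectl api-versions`) and succeeds if $1 matches one line exactly.
has_api_version() {
  grep -qxF -- "$1"
}

# Example against a live cluster (commented out so the snippet has no side effects):
#   kubectl api-versions | has_api_version networking.k8s.io/v1 \
#     && echo "Ingress v1 is served"
```

Charts that still render resources for an API version the cluster no longer serves will fail at install time, which is the situation described for the Jenkins chart later in this document.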

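Common Helm commands

Check the Helm version with `helm version`.

Repository commands (repo)

    # list the configured chart repositories
    $ helm repo list
    # add a repository
    $ helm repo add jumpserver https://jumpserver.github.io/helm-charts
    # refresh the local index of all repositories
    $ helm repo update
    # remove a repository
    $ helm repo remove jumpserver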

Search commands (search)

    $ helm search repo gitlab

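Show commands (show)

    # show everything about a chart (metadata, README and default values)
    $ helm show all gitlab/gitlab
    # show only the chart metadata
    $ helm show chart gitlab/gitlab
    # show only the README
    $ helm show readme gitlab/gitlab
    # show only the default values
    $ helm show values gitlab/gitlab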

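Install commands (install)

    # install a specific chart version with a custom values file
    $ helm install ingress-nginx --version 4.4.3 -f ./ingress-nginx-values.yaml ingress-nginx/ingress-nginx
    # override a single value on the command line
    $ helm install ingress-nginx --version 4.4.3 --set nfs.server=192.168.1.43 ingress-nginx/ingress-nginx
    # install from a local chart directory
    $ helm install ingress-nginx ingress-nginx/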

Installing nfs-client-provisioner

Install the NFS file-sharing service

    $ yum install -y nfs-utils
    $ mkdir -p /data/volumes/nfs
    $ vim /etc/exports
    /data/volumes/nfs 192.168.1.0/24(rw,no_root_squash)
    $ systemctl restart nfs
    $ showmount -e 192.168.1.43
    Export list for 192.168.1.43:
    /data/volumes/nfs 192.168.1.0/24
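If the export entry has to be added from a script rather than an editor, a small idempotent helper avoids duplicate lines on repeated runs. This is only a sketch: `add_export` is a hypothetical name, and the path and subnet in the usage comment are the ones used in this document.

```shell
#!/usr/bin/env bash
# add_export: hypothetical helper that appends an export line to an exports
# file only if that exact line is not already present.
#   $1 = exports file (e.g. /etc/exports)
#   $2 = export line to add
add_export() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (run as root on the NFS server), followed by `exportfs -ra` to
# re-export without restarting the service:
#   add_export /etc/exports '/data/volumes/nfs 192.168.1.0/24(rw,no_root_squash)'
```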

Install the nfs-utils package on every node in the Kubernetes cluster.

    $ yum install -y nfs-utils

Install nfs-client-provisioner with Helm

    $ helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/
    $ helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner --version 4.0.17 \
        --set nfs.server=192.168.1.43 \
        --set nfs.path=/data/volumes/nfs \
        --set image.repository=putianhui/nfs-subdir-external-provisioner \
        --set image.tag=v4.0.2 \
        --set storageClass.create=true \
        --set storageClass.name=nfs-sc \
        --set storageClass.reclaimPolicy=Retain

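- nfs.server: IP address of your NFS server
- nfs.path: directory exported by the NFS server
- image.repository: the default image lives in the official Kubernetes registry hosted by Google, which is not reachable from every network, so I pulled the image and pushed it to my own repository
- image.tag: image version
- storageClass.create: create a StorageClass automatically
- storageClass.name: name of the automatically created StorageClass
- storageClass.reclaimPolicy: reclaim policy of the automatically created StorageClass; the default is Delete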
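The same parameters can also be kept in a values file instead of a chain of `--set` flags, which is easier to review and reuse. A minimal sketch follows; the helper name `write_nfs_values` and the file name `nfs-values.yaml` are assumptions made for illustration, while the keys and values are the ones described in this section.

```shell
#!/usr/bin/env bash
# write_nfs_values: hypothetical helper that writes the settings described
# above into a Helm values file ($1, default ./nfs-values.yaml).
write_nfs_values() {
  local out="${1:-./nfs-values.yaml}"
  cat > "$out" <<'EOF'
nfs:
  server: 192.168.1.43
  path: /data/volumes/nfs
image:
  repository: putianhui/nfs-subdir-external-provisioner
  tag: v4.0.2
storageClass:
  create: true
  name: nfs-sc
  reclaimPolicy: Retain
EOF
}

# Example:
#   write_nfs_values ./nfs-values.yaml
#   helm install nfs-subdir-external-provisioner \
#     nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \
#     --version 4.0.17 -f ./nfs-values.yaml
```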

After the Helm install, check that the nfs-provisioner pod is running and that the StorageClass was created

    $ kubectl get pod
    NAME                                              READY   STATUS    RESTARTS   AGE
    nfs-subdir-external-provisioner-cbc4c9bcb-5mrbc   1/1     Running   0          3m22s

    $ kubectl get sc
    NAME     PROVISIONER                                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    nfs-sc   cluster.local/nfs-subdir-external-provisioner   Retain          Immediate           true                   3m30s

Create a PVC that is provisioned through the StorageClass and a Deployment that mounts it, to verify that the storage works

    $ vim test-sc-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: test-nfs-mount-pvc
    spec:
      storageClassName: "nfs-sc"
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 20Gi
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: test-nfs-mount-pvc-deploy
    spec:
      selector:
        matchLabels:
          app: test-nfs-mount
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            app: test-nfs-mount
        spec:
          containers:
            - image: centos:7
              name: centos
              volumeMounts:
                - name: test-nfs-pvc
                  mountPath: /data/
              command: ["/bin/bash", "-c", "sleep 9999999"]
          volumes:
            - name: test-nfs-pvc
              persistentVolumeClaim:
                claimName: test-nfs-mount-pvc

Apply the test manifest

    $ kubectl apply -f test-sc-pvc.yaml
    persistentvolumeclaim/test-nfs-mount-pvc created
    deployment.apps/test-nfs-mount-pvc-deploy created

    $ kubectl get pvc
    test-nfs-mount-pvc   Bound   pvc-5e717506-9ddf-4d69-8932-515844789c9c   20Gi   RWO   nfs-sc   80s

    $ kubectl get pod
    test-nfs-mount-pvc-deploy-7565c5586b-lf7tn   1/1   Running   0   109s

Installing ingress-nginx with Helm 3

Install and verify ingress

Install ingress-nginx with Helm

    $ helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx
    $ helm repo update

Create a values file, ingress-nginx-values.yaml, that overrides part of the chart's default values.yaml

    $ vim ingress-nginx-values.yaml
    controller:
      image:
        chroot: false
        registry: docker.io
        image: putianhui/controller
        tag: 'v1.6.1'
        digest: ''
        digestChroot: ''
        pullPolicy: IfNotPresent
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      nodeSelector:
        kubernetes.io/os: linux
        ingress: 'true'
      kind: DaemonSet
      ingressClassResource:
        enabled: true
        default: true
      admissionWebhooks:
        patch:
          enabled: true
          image:
            registry: docker.io
            image: putianhui/kube-webhook-certgen
            tag: v20220916-gd32f8c343
            digest: ""

Label the node so that the ingress controller can be scheduled on it

    $ kubectl label nodes lingang-k8s03-43 ingress=true

Install ingress-nginx into the cluster with Helm

    $ helm install ingress-nginx-controller --version 4.4.3 -f ./ingress-nginx-values.yaml ingress-nginx/ingress-nginx

Create a Deployment, a Service and an Ingress rule to test that ingress works

    $ vim test-ingress.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ingress-svc-demo
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
            - image: putianhui/myapp:v1
              name: ingress-svc-demo
              ports:
                - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ingress-svc-demo
    spec:
      selector:
        app: myapp
      ports:
        - targetPort: 80
          port: 80
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: demo-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: "demo.mrpu.cn"
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: ingress-svc-demo
                    port:
                      number: 80

Apply the manifest and request the ingress host name to verify. The two replicas should answer in turn.

    $ kubectl apply -f test-ingress.yaml

    $ curl demo.mrpu.cn/hostname.html
    ingress-svc-demo-58d45b676b-t6d9k

    $ curl demo.mrpu.cn/hostname.html
    ingress-svc-demo-58d45b676b-xcgkg

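Configuring HTTPS access to the ingress

First base64-encode the certificate and the private key for the domain, and keep the encoded output

    $ echo -n "<contents of tls.crt (certificate)>" | base64

    $ echo -n "<contents of tls.key (private key)>" | base64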

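Create a Secret that stores the encoded certificate and private key.

    $ vim test-ingress-tls-secret.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: demo-mrpu-cn-tls
      namespace: default
    data:
      tls.crt: <base64-encoded certificate from the previous step>
      tls.key: <base64-encoded private key from the previous step>
    type: kubernetes.io/tls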
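Encoding the two files by hand and pasting the results is error-prone, mainly because some `base64` implementations wrap long output across several lines. The sketch below generates the whole manifest from the two files. `make_tls_secret` is a hypothetical helper name, and the file names in the usage comment are assumptions. `kubectl create secret tls demo-mrpu-cn-tls --cert=./tls.crt --key=./tls.key` is a built-in alternative that skips the manifest entirely.

```shell
#!/usr/bin/env bash
# make_tls_secret: hypothetical helper that prints a kubernetes.io/tls Secret
# manifest for the given certificate and key files.
#   $1 = secret name, $2 = certificate file, $3 = private key file
make_tls_secret() {
  local name="$1" crt key
  # Strip newlines so each value is a single line regardless of how the
  # local base64 implementation wraps its output.
  crt=$(base64 < "$2" | tr -d '\n')
  key=$(base64 < "$3" | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: default
type: kubernetes.io/tls
data:
  tls.crt: ${crt}
  tls.key: ${key}
EOF
}

# Example:
#   make_tls_secret demo-mrpu-cn-tls ./tls.crt ./tls.key > test-ingress-tls-secret.yaml
```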

Apply the manifest to create the certificate Secret

    $ kubectl apply -f test-ingress-tls-secret.yaml

Edit the Ingress in the test manifest and add the TLS configuration

    $ vim test-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: demo-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      tls:
        - hosts:
            - demo.mrpu.cn
          secretName: demo-mrpu-cn-tls
      rules:
        - host: "demo.mrpu.cn"
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: ingress-svc-demo
                    port:
                      number: 80

Re-apply the modified Ingress manifest

    $ kubectl apply -f test-ingress.yaml

Once it is applied, the ingress host name is reachable over HTTPS.

Configuring ingress access-log analysis

Create a PVC to persist the ingress-nginx logs

    $ vim ingress-nginx-log-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: ingress-nginx-accesslog
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 50Gi
      storageClassName: nfs-sc

    $ kubectl apply -f ingress-nginx-log-pvc.yaml

Edit the ingress-nginx Helm values file, ingress-nginx-values.yaml, to persist the access log to the PVC and to write it in JSON format.

    controller:
      # ... same content as in the deployment values above, omitted ...
      extraInitContainers:
        - command:
            - chown
            - -R
            - 101:101
            - /var/log/nginx
          image: alpine:3
          name: nginx-data-dir-ownership
          volumeMounts:
            - mountPath: /var/log/nginx
              name: ingress-nginx-accesslog-pvc
          securityContext:
            runAsUser: 0
      extraVolumeMounts:
        - name: ingress-nginx-accesslog-pvc
          mountPath: '/var/log/nginx'
      extraVolumes:
        - name: ingress-nginx-accesslog-pvc
          persistentVolumeClaim:
            claimName: ingress-nginx-accesslog
      config:
        http-access-log-path: '/var/log/nginx/httpAccess.log'
        log-format-upstream: '{"@timestamp":"$time_iso8601",
          "@source":"$server_addr",
          "hostname":"$hostname",
          "ip":"$remote_addr",
          "client":"$remote_addr",
          "request_method":"$request_method",
          "scheme":"$scheme",
          "domain":"$server_name",
          "referer":"$http_referer",
          "request":"$request_uri",
          "args":"$args",
          "size":$body_bytes_sent,
          "status": $status,
          "responsetime":$request_time,
          "upstreamtime":"$upstream_response_time",
          "upstreamaddr":"$upstream_addr",
          "http_user_agent":"$http_user_agent",
          "ns":"$namespace",
          "ingress_name":"$ingress_name",
          "service_name":"$service_name",
          "service_port":"$service_port",
          "server_port": "$server_port",
          "https":"$https"}'

If you deployed the controller from a manually downloaded ingress-nginx manifest instead of Helm, set the access-log format by editing the controller's ConfigMap as shown below.

    $ kubectl get cm ingress-nginx-controller-controller -oyaml
    apiVersion: v1
    data:
      log-format-upstream: '{"@timestamp":"$time_iso8601",
        "@source":"$server_addr",
        "hostname":"$hostname",
        "ip":"$remote_addr",
        "client":"$remote_addr",
        "request_method":"$request_method",
        "scheme":"$scheme",
        "domain":"$server_name",
        "referer":"$http_referer",
        "request":"$request_uri",
        "args":"$args",
        "size":$body_bytes_sent,
        "status": $status,
        "responsetime":$request_time,
        "upstreamtime":"$upstream_response_time",
        "upstreamaddr":"$upstream_addr",
        "http_user_agent":"$http_user_agent",
        "ns":"$namespace",
        "ingress_name":"$ingress_name",
        "service_name":"$service_name",
        "service_port":"$service_port",
        "server_port": "$server_port",
        "https":"$https"}'
    # ... rest of the ConfigMap omitted ...

Deploy a temporary Elasticsearch instance for testing. Skip this step if you already have one.

    $ vim es681-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: elasticsearch
    spec:
      selector:
        matchLabels:
          app: elasticsearch
      replicas: 1
      template:
        metadata:
          labels:
            app: elasticsearch
        spec:
          containers:
            - name: elasticsearch
              image: elasticsearch:6.8.1
              ports:
                - containerPort: 9200
                  name: http
                - containerPort: 9300
                  name: transport
              volumeMounts:
                - name: data
                  mountPath: /usr/share/elasticsearch/data
          volumes:
            - name: data
              persistentVolumeClaim:
                claimName: elasticsearch-data
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: elasticsearch
    spec:
      selector:
        app: elasticsearch
      ports:
        - name: http
          port: 9200
          targetPort: 9200
          nodePort: 31920
        - name: transport
          port: 9300
          targetPort: 9300
          nodePort: 31930
      type: NodePort
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: elasticsearch-data
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      storageClassName: nfs-sc

    $ kubectl apply -f es681-deploy.yaml

Deploy Logstash with the ingress-nginx log PVC mounted. It reads the access log, parses it, and stores the result in Elasticsearch.

    $ vim ingress-nginx-log-logstash.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: logstash
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: logstash
      template:
        metadata:
          labels:
            app: logstash
        spec:
          containers:
            - name: logstash
              image: logstash:6.8.1
              command: ["/bin/sh", "-c", "logstash -f /usr/share/logstash/pipeline/logstash.conf"]
              volumeMounts:
                - name: ingress-nginx-accesslog
                  mountPath: /var/log/nginx/
                - name: logstash-config-volume
                  mountPath: /usr/share/logstash/pipeline/
          volumes:
            - name: ingress-nginx-accesslog
              persistentVolumeClaim:
                claimName: ingress-nginx-accesslog
            - name: logstash-config-volume
              configMap:
                name: logstash-config
                items:
                  - key: logstash.conf
                    path: logstash.conf
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: logstash-config
    data:
      logstash.conf: |
        input {
          file {
            path => [ "/var/log/nginx/*.log" ]
            ignore_older => 0
            codec => json
          }
        }

        filter {
          mutate {
            convert => [ "status", "integer" ]
            convert => [ "size", "integer" ]
            # field name matches "upstreamtime" in the nginx log format
            convert => [ "upstreamtime", "float" ]
            remove_field => "message"
          }
          geoip {
            source => "ip"
          }
          # the user agent is logged as "http_user_agent"
          if [http_user_agent] != "-" {
            useragent {
              target => "agent"
              source => "http_user_agent"
            }
          }
        }

        output {
          elasticsearch {
            hosts => "192.168.1.41:31920"
            index => "logstash-nginx-access-%{+YYYY.MM.dd}"
          }
        }

Deploy a temporary Grafana instance for testing. Skip this step if you already have one.

    $ vim grafana-deploy.yaml
    apiVersion: v1
    kind: Service
    metadata:
      name: grafana-nginx-log
      labels:
        k8s-app: grafana
    spec:
      type: NodePort
      ports:
        - name: http
          port: 3000
          targetPort: 3000
          nodePort: 30088
      selector:
        k8s-app: grafana
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grafana
      labels:
        k8s-app: grafana
    spec:
      selector:
        matchLabels:
          k8s-app: grafana
      template:
        metadata:
          labels:
            k8s-app: grafana
        spec:
          initContainers:
            - name: init-file
              image: busybox:1.28
              imagePullPolicy: IfNotPresent
              command: ['chown', '-R', '472:0', '/var/lib/grafana']
              volumeMounts:
                - name: data
                  mountPath: /var/lib/grafana
                  subPath: grafana
          containers:
            - name: grafana
              image: grafana/grafana:8.5.24
              imagePullPolicy: IfNotPresent
              ports:
                - name: http
                  containerPort: 3000
              env:
                - name: GF_SECURITY_ADMIN_USER
                  value: 'admin'
                - name: GF_SECURITY_ADMIN_PASSWORD
                  value: 'admin'
              readinessProbe:
                failureThreshold: 10
                httpGet:
                  path: /api/health
                  port: 3000
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 30
              livenessProbe:
                failureThreshold: 10
                httpGet:
                  path: /api/health
                  port: 3000
                  scheme: HTTP
                initialDelaySeconds: 10
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 1
              volumeMounts:
                - name: data
                  mountPath: /var/lib/grafana
                  subPath: grafana
          volumes:
            - name: data
              persistentVolumeClaim:
                claimName: grafana-pvc
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: grafana-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      storageClassName: nfs-sc

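Log in to Grafana and add an Elasticsearch data source. The data source must be named nginx-es; with any other name the imported dashboard may fail to find it (alternatively, edit the data source name in the dashboard JSON).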

Import Grafana dashboard ID 2292 and open it to see the charts. You can also import the modified dashboard JSON below instead.

Download my modified dashboard JSON and import it into Grafana

    https://www.putianhui.cn/package/script/grafana/ingress-nginx-logs-grafana.json

Installing JumpServer with Helm 3

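Prerequisites: MySQL 5.7, Redis 6.0, a StorageClass, an ingress controller, and a TLS Secret for the domain must all be in place before installing. Their installation is not covered in this section; they are simply used here.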

Install JumpServer with Helm

    $ helm repo add jumpserver https://jumpserver.github.io/helm-charts
    $ helm repo update

Create the values file for JumpServer

    $ vim jms-values.yaml
    global:
      storageClass: 'nfs-sc'

    externalDatabase:
      engine: mysql
      host: 192.168.1.43
      port: 3306
      user: root
      password: 'putianhui'
      database: jumpserver

    externalRedis:
      host: 192.168.1.43
      port: 6379
      password: 'putianhui'

    ingress:
      enabled: true
      annotations:
        kubernetes.io/ingress.class: nginx
        nginx.ingress.kubernetes.io/proxy-body-size: '4096m'
        nginx.ingress.kubernetes.io/configuration-snippet: |
          proxy_set_header Upgrade "websocket";
          proxy_set_header Connection "Upgrade";
          proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
      hosts:
        - 'jms.mrpu.cn'
      tls:
        - secretName: demo-mrpu-cn-tls
          hosts:
            - jms.mrpu.cn

    core:
      enabled: true
      config:
        secretKey: 'GDPzrwvPD2uc0VKvTkfsruQgMNonhyHBH0JAqadlocgt3ooaQC'
        bootstrapToken: 'TUZQD80hlrcpjE8fGcW9B788'
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 200Gi

    koko:
      enabled: true
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 50Gi

    lion:
      enabled: true
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 50Gi

    magnus:
      enabled: true
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 30Gi

    omnidb:
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 10Gi

    razor:
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 50Gi

    web:
      enabled: true
      persistence:
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteMany
        size: 10Gi

Install the release with Helm using the custom JumpServer values file

    $ helm install jumpserver --version 2.28.6 -f ./jms-values.yaml jumpserver/jumpserver

After a successful deployment, open the ingress host name configured above in a browser. The default account and password are admin/admin.

Installing Harbor with Helm 3

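Prerequisites: a StorageClass, an ingress controller, and a TLS Secret for the domain must all be in place before installing. Their installation is not covered in this section; they are simply used here.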

Install Harbor

Add the Harbor repository with Helm

    $ helm repo add harbor https://helm.goharbor.io
    $ helm repo update

Create a values file, harbor-values.yaml, that overrides part of the chart's default values.yaml

    expose:
      type: ingress
      tls:
        enabled: true
        certSource: secret
        secret:
          secretName: 'mrpu-cn-tls'
          notarySecretName: 'mrpu-cn-tls'
      ingress:
        hosts:
          core: harbor.mrpu.cn
          notary: notary-harbor.mrpu.cn
        controller: default
        className: 'nginx'
        annotations:
          ingress.kubernetes.io/ssl-redirect: 'true'
          ingress.kubernetes.io/proxy-body-size: '0'
          nginx.ingress.kubernetes.io/ssl-redirect: 'true'
          nginx.ingress.kubernetes.io/proxy-body-size: '0'

    externalURL: https://harbor.mrpu.cn

    ipFamily:
      ipv6:
        enabled: false

    persistence:
      enabled: true
      resourcePolicy: 'keep'
      persistentVolumeClaim:
        registry:
          storageClass: 'nfs-sc'
          accessMode: ReadWriteOnce
          size: 100Gi
        chartmuseum:
          storageClass: 'nfs-sc'
          accessMode: ReadWriteOnce
          size: 50Gi
        jobservice:
          jobLog:
            storageClass: 'nfs-sc'
            accessMode: ReadWriteOnce
            size: 10Gi
          scanDataExports:
            storageClass: 'nfs-sc'
            accessMode: ReadWriteOnce
            size: 10Gi
        database:
          storageClass: 'nfs-sc'
          accessMode: ReadWriteOnce
          size: 100Gi
        redis:
          storageClass: 'nfs-sc'
          accessMode: ReadWriteOnce
          size: 50Gi
        trivy:
          storageClass: 'nfs-sc'
          accessMode: ReadWriteOnce
          size: 50Gi
      imageChartStorage:
        disableredirect: false
        type: filesystem
        filesystem:
          rootdirectory: /storage

    harborAdminPassword: 'Harbor12345'

    metrics:
      enabled: true

Install the release into the cluster with Helm using the custom Harbor values file

    $ helm install harbor --version 1.11.0 -f ./harbor-values.yaml harbor/harbor

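Once the release is installed and all pods are running, Harbor is reachable at the externalURL host name, provided that the domain already resolves to the IP of the ingress controller node. Log in as admin with the harborAdminPassword defined in the values file.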

Verify pushing and pulling container images

Log in to the new Harbor registry with docker, then push an image as a test.

    $ docker login https://harbor.mrpu.cn
    Username: admin
    Password: <Harbor password>
    Login Succeeded

    $ docker pull putianhui/myapp:v1

    $ docker tag putianhui/myapp:v1 harbor.mrpu.cn/library/myapp:v1

    $ docker push harbor.mrpu.cn/library/myapp:v1

Delete the local image, then pull it back from the Harbor server

    $ docker images
    REPOSITORY                     TAG   IMAGE ID       CREATED       SIZE
    harbor.mrpu.cn/library/myapp   v1    d4a5e0eaa84f   4 years ago   15.5MB

    $ docker rmi harbor.mrpu.cn/library/myapp:v1

    $ docker images
    REPOSITORY   TAG   IMAGE ID   CREATED   SIZE

    $ docker pull harbor.mrpu.cn/library/myapp:v1

Verify pushing and pulling Helm charts

First install the Helm push plugin locally (the plugin installer needs git)

    $ yum install -y git

    $ helm plugin install https://github.com/chartmuseum/helm-push

Add Harbor's default chart repository to Helm

    $ helm repo add myrepo --username=admin --password=Harbor12345 https://harbor.mrpu.cn/chartrepo

    $ helm repo list
    NAME     URL
    myrepo   https://harbor.mrpu.cn/chartrepo

Create a demo chart locally and push it to the Harbor chart repository

    $ helm create mydemo

    $ helm cm-push --username=admin --password=Harbor12345 mydemo myrepo

    $ helm repo update

    $ helm search repo demo
    NAME                    CHART VERSION   APP VERSION   DESCRIPTION
    myrepo/library/mydemo   0.1.0           1.16.0        A Helm chart for Kubernetes

The pushed chart is also visible in the Harbor UI under Projects > library > Helm Charts > mydemo.

Installing Jenkins with Helm 3

Install Jenkins

Add the Jenkins Helm repository and update it (see the official chart documentation)

    $ helm repo add jenkinsci https://charts.jenkins.io
    $ helm repo update

Create the values file for Jenkins

    $ vim jenkins-values.yaml
    controller:
      adminUser: 'admin'
      adminPassword: 'putianhui'
      ingress:
        enabled: false
      installPlugins:
        - kubernetes:3845.va_9823979a_744
        - workflow-aggregator:590.v6a_d052e5a_a_b_5
        - git:4.13.0
        - git-parameter:0.9.18
        - maven-plugin:3.20
        - config-file-provider:3.11
        - extended-choice-parameter:359.v35dcfdd0c20d
        - description-setter:1.10
        - build-name-setter:2.2.0
        - configuration-as-code:1569.vb_72405b_80249
        - localization-zh-cn:1.0.24
        - gitlab-plugin:1.7.4

    agent:
      enabled: true
      jenkinsUrl: http://jenkins.default.svc.cluster.local:8080
      jenkinsTunnel: jenkins-agent.default.svc.cluster.local:50000
      namespace: default

    persistence:
      enabled: true
      storageClass: 'nfs-sc'
      accessMode: 'ReadWriteOnce'
      size: '100Gi'

Install the release

    $ helm install jenkins --version 4.3.0 -f ./jenkins-values.yaml jenkinsci/jenkins

Ingress is disabled in the values above because this chart version fails to create its Ingress on a v1.24.6 cluster. Helm is therefore not asked to create it; create the Ingress by hand once the service is running.

    $ vim jenkins-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: jenkins-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: jenkins.mrpu.cn
          http:
            paths:
              - backend:
                  service:
                    name: jenkins
                    port:
                      number: 8080
                path: /
                pathType: Prefix
      tls:
        - hosts:
            - jenkins.mrpu.cn
          secretName: mrpu-cn-tls

Apply the manifest to create the Ingress

    $ kubectl apply -f ./jenkins-ingress.yaml

Once all pods from the Helm release are running, Jenkins is reachable at the ingress host name jenkins.mrpu.cn. The account and password are the ones set in jenkins-values.yaml: admin/putianhui.

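After installation, change the following settings:

1. Manage Jenkins > Configure Global Security > Host Key Verification Strategy: set to No Verification

2. Manage Jenkins > Configure Global Security > Agents: set a fixed port, 50000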

Configure the Kubernetes cloud

Log in to one of the Kubernetes nodes and extract the cluster CA certificate, the client certificate and the client key from ~/.kube/config

    $ cd ~/.kube
    $ cad=`cat ./config | grep certificate-authority-data | awk -F : '{print $2}'`
    $ ccd=`cat ./config | grep client-certificate-data | awk -F : '{print $2}'`
    $ ckd=`cat ./config | grep client-key-data | awk -F : '{print $2}'`

    $ echo $cad | base64 -d > ./ca.crt
    $ echo $ccd | base64 -d > ./client.crt
    $ echo $ckd | base64 -d > ./client.key

    $ cat ./ca.crt
    -----BEGIN CERTIFICATE-----
    ... (content omitted) ...
    6no=
    -----END CERTIFICATE-----
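The three extractions above differ only in the key name, so they can be folded into one function. A minimal sketch, assuming a kubeconfig with a single cluster and a single user (it takes the first matching line) and a `base64` that accepts `-d`; `kubeconfig_field` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# kubeconfig_field: hypothetical helper that prints the base64-decoded value
# of the first "<key>: <value>" line found in a kubeconfig file.
#   $1 = kubeconfig path, $2 = key (e.g. client-certificate-data)
kubeconfig_field() {
  # awk splits on whitespace, so $1 is "key:" and $2 is the encoded value.
  awk -v key="$2:" '$1 == key { print $2; exit }' "$1" | base64 -d
}

# Example:
#   kubeconfig_field ~/.kube/config certificate-authority-data > ca.crt
#   kubeconfig_field ~/.kube/config client-certificate-data    > client.crt
#   kubeconfig_field ~/.kube/config client-key-data            > client.key
```

Matching the key as a whole field avoids accidental hits on lines that merely contain the key name as a substring.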

Convert the client certificate obtained above into the PKCS#12 file that Jenkins uses to connect to Kubernetes

    $ openssl pkcs12 -export -out ./client.pfx -inkey ./client.key -in ./client.crt -certfile ./ca.crt
    $ ls
    cache  ca.crt  client.crt  client.key  client.pfx  config

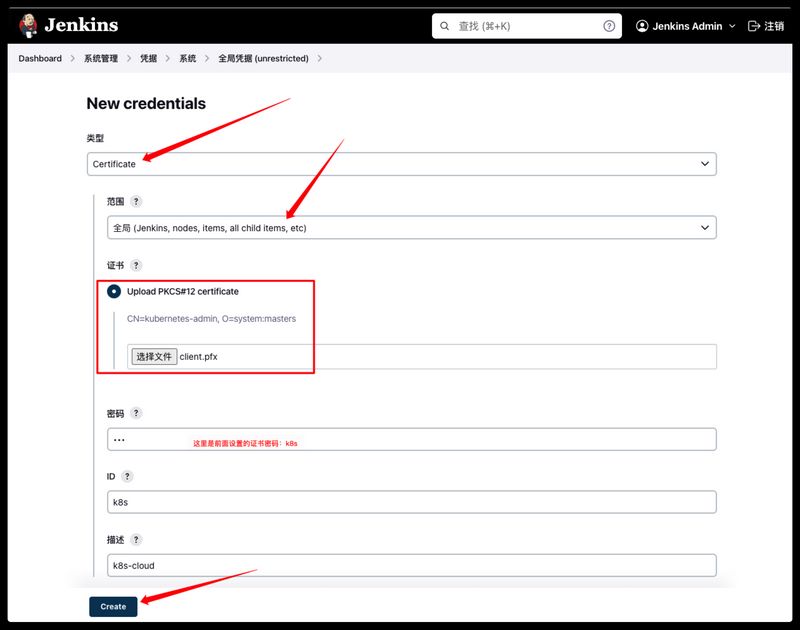

Add the client.pfx file (PKCS#12 format) generated in the previous step to the Jenkins credentials: Manage Jenkins > Manage Credentials > System > Global credentials > Add Credentials

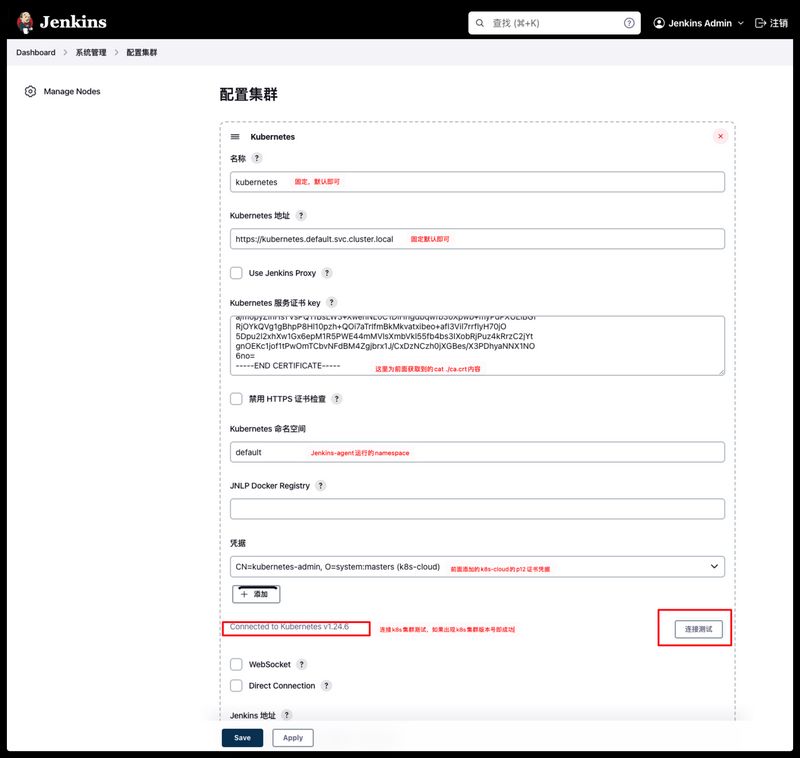

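Add the Kubernetes cluster: Manage Jenkins > Manage Nodes > Configure Clouds > Kubernetes Cloud Details

Note: if the connection test reports the following error, upgrade the Kubernetes plugin to the latest version.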

No httpclient implementations found on the context classloader, please ensure your classpath includes an implementation jar

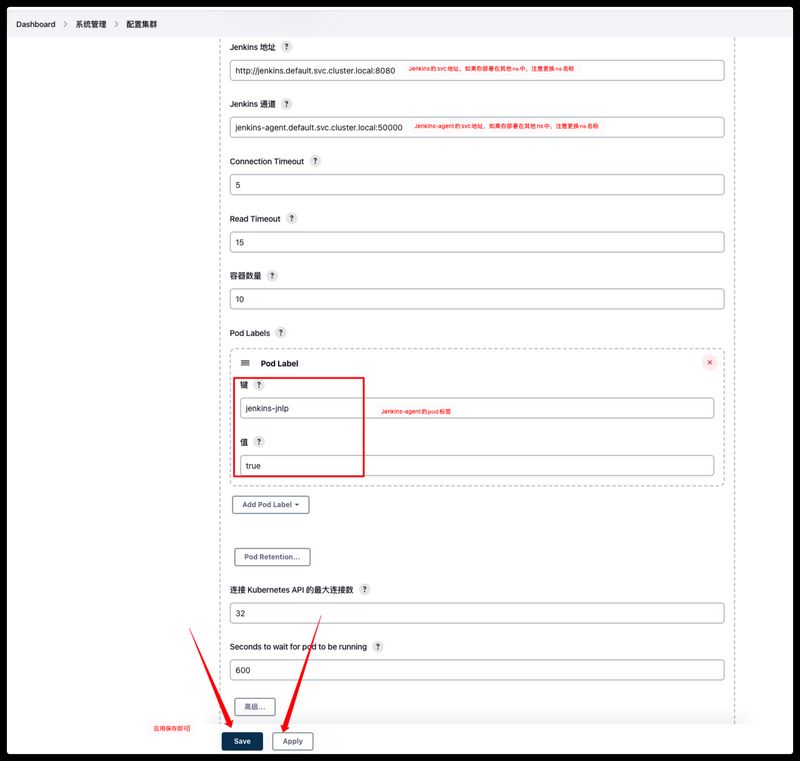

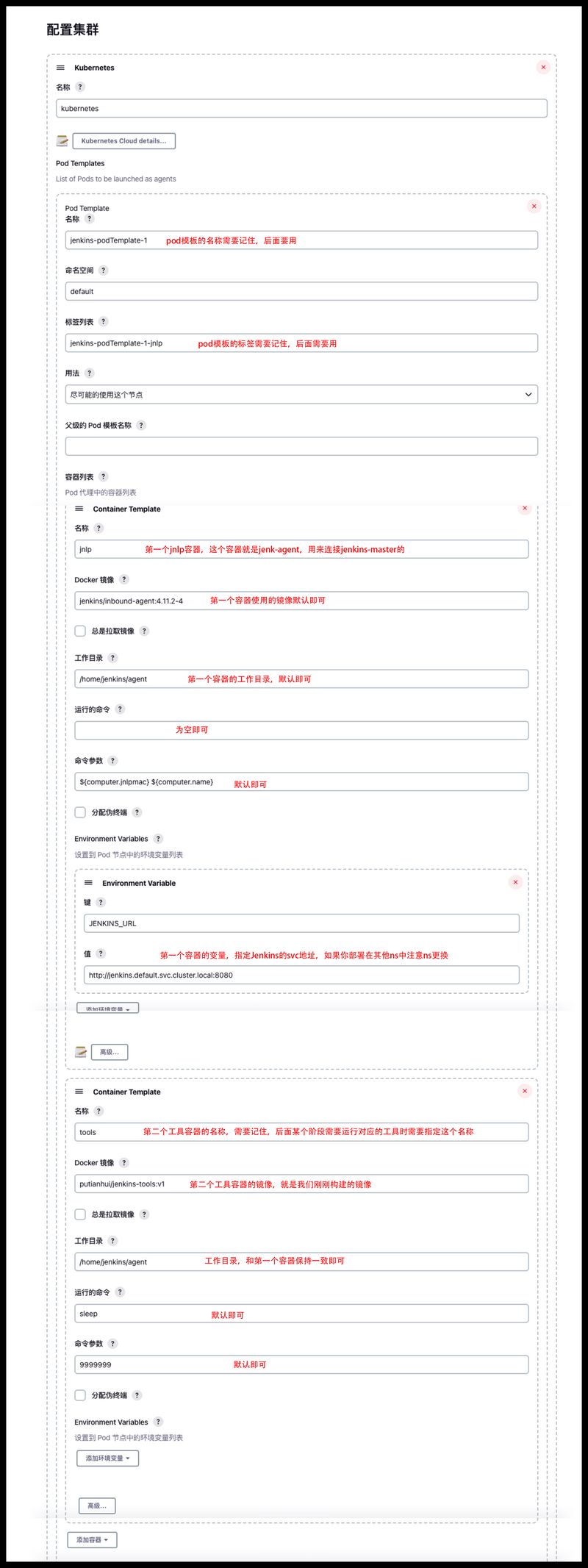

Add the Jenkins agent configuration

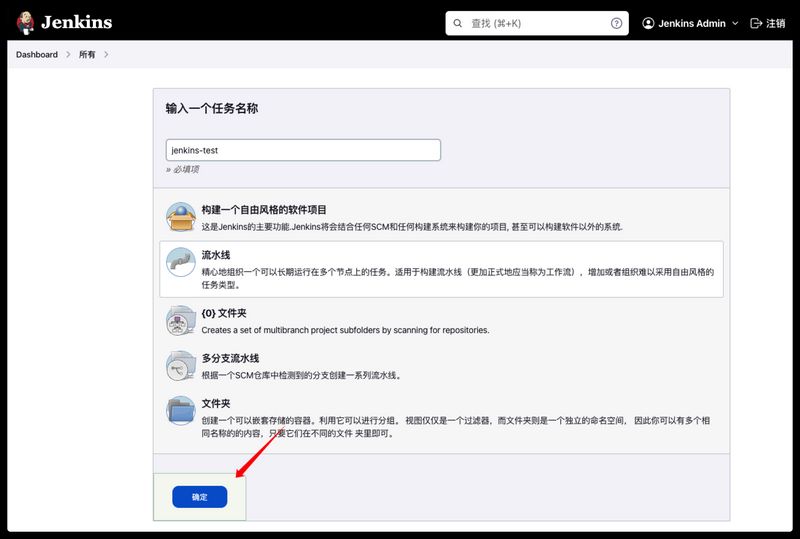

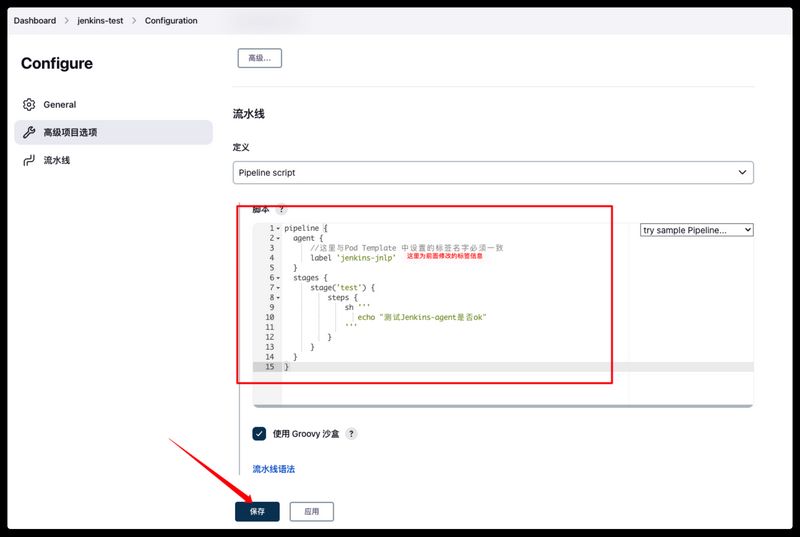

Verify the Jenkins agent

Create a test Pipeline job to check that the Jenkins agent works: New Item > any name > Pipeline > OK

Add the following Pipeline script. It runs on an agent node, which here is a pod in the Kubernetes cluster selected by the label configured earlier, jenkins-jnlp.

    pipeline {
      agent {
        kubernetes {
          // agent label configured in the cloud's pod template
          label "jenkins-jnlp"
        }
      }

      stages {
        stage('2. Maven build') {
          steps {
            sh '''
              echo "Testing whether the Jenkins agent works"
            '''
          }
        }
      }
    }

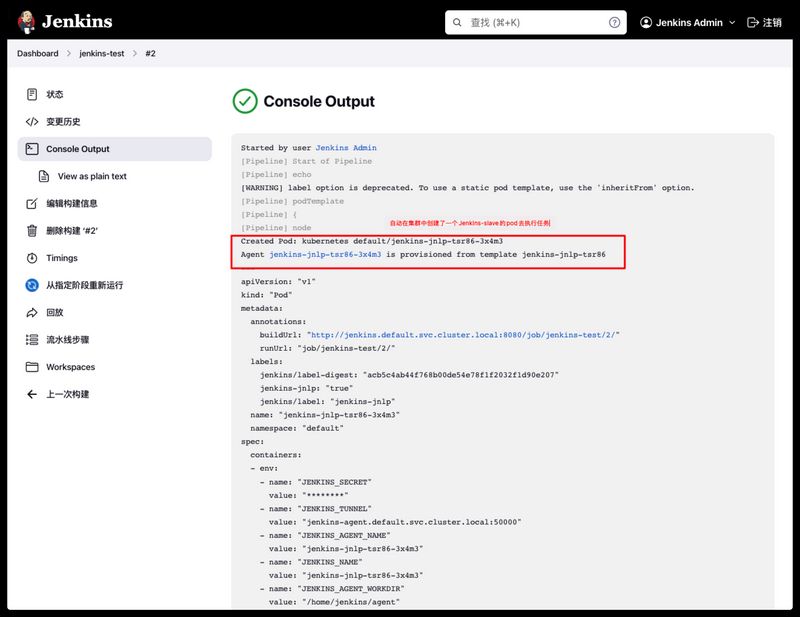

Click Build Now and let the job run. If the build log shows the output below, the agent works.

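Common Pipeline templates

Reference documentation on Pod templates

Documentation on using template inheritance in Jenkins to build with multiple containers and environments

Documentation of common Jenkins-slave Pipeline templates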

Declarative templates

Option 1: define the Pod template inside the Jenkins Pipeline

    pipeline {
      agent {
        kubernetes {
          cloud 'kubernetes'
          namespace 'default'
          workingDir '/home/jenkins/agent'
          inheritFrom 'jenkins-slave'
          defaultContainer 'jnlp'
          yaml """\
            apiVersion: v1
            kind: Pod
            metadata:
              labels:
                jenkins-jnlp: "true"
                jenkins/label: "jenkins-slave"
            spec:
              containers:
              - image: "jenkins/inbound-agent:4.11.2-4"
                imagePullPolicy: "IfNotPresent"
                name: "jnlp"
                securityContext:
                  privileged: true
                tty: true
                volumeMounts:
                - mountPath: "/tmp"
                  name: "volume-0"
                  readOnly: false
                - mountPath: "/home/jenkins/agent"
                  name: "workspace-volume"
                  readOnly: false
              - image: "putianhui/myapp:v1"
                imagePullPolicy: "IfNotPresent"
                name: "myapp"
                resources: {}
                securityContext:
                  privileged: true
                tty: true
                volumeMounts:
                - mountPath: "/tmp"
                  name: "volume-0"
                  readOnly: false
                - mountPath: "/home/jenkins/agent"
                  name: "workspace-volume"
                  readOnly: false
              restartPolicy: "Never"
              volumes:
              - name: "volume-0"
                persistentVolumeClaim:
                  claimName: "jumpserver-jms-web-logs"
                  readOnly: false
              - emptyDir:
                  medium: ""
                name: "workspace-volume"
          """.stripIndent()
        }
      }

      stages {
        stage('git-checkout') {
          steps {
            echo "declarative Pipeline - kubernetes"
            container('jnlp') {
              sh """
                echo "git checkout"
                touch git.txt
                df -hT
              """
            }
          }
        }

        stage('mvn-compile') {
          steps {
            container('myapp') {
              sh """
                ls -l
              """
            }
          }
        }

        stage('restart-Myapp') {
          steps {
            container('myapp') {
              sh """
                echo "myapp"
                df -hT
              """
            }
          }
        }
      }
    }

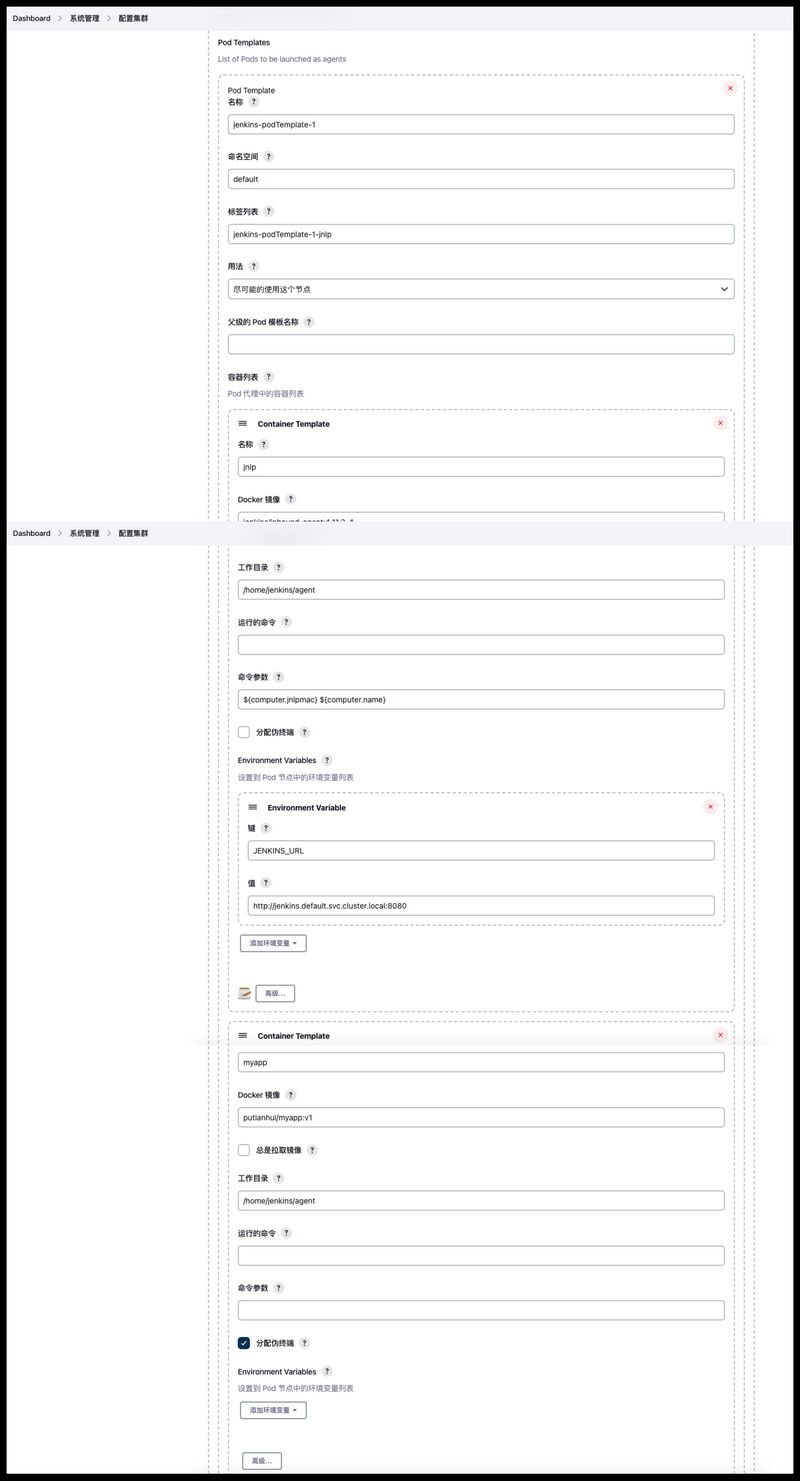

Option 2: under Manage Jenkins > Configure Clouds > Pod Template, configure a pod template named jenkins-podTemplate-1 with two containers. One is the Jenkins agent container that connects to the Jenkins master; the other is a test container.

Note: the command field of the jnlp container appears to default to sleep. Clear it, otherwise it overrides the container's own command, the jnlp container cannot connect to the Jenkins master, and no job can run.

    pipeline {
      agent {
        label 'jenkins-podTemplate-1-jnlp'
      }

      stages {
        stage('git-checkout') {
          steps {
            container('jnlp') {
              sh """
                echo "git checkout"
                touch git.txt
                df -hT
              """
            }
          }
        }

        stage('mvn-compile') {
          steps {
            container('myapp') {
              sh """
                ls -l
              """
            }
          }
        }

        stage('restart-Myapp') {
          steps {
            container('myapp') {
              sh """
                echo "myapp"
                df -hT
              """
            }
          }
        }
      }
    }

Scripted templates

Option 1: define the Pod template inside the Jenkins Pipeline

    podTemplate(name: 'jenkins-slave', cloud: 'kubernetes',
      namespace: 'default', label: 'jenkins-slave', serviceAccount: 'default',
      containers: [
        containerTemplate(
          name: 'jnlp',
          image: 'jenkins/inbound-agent:4.11.2-4',
          args: '${computer.jnlpmac} ${computer.name}',
          ttyEnabled: true,
          privileged: true,
          alwaysPullImage: false
        ),
        containerTemplate(
          name: 'myapp',
          image: 'putianhui/myapp:v1',
          ttyEnabled: true,
          privileged: true,
          alwaysPullImage: false
        )
      ],
      volumes: [
        persistentVolumeClaim(mountPath: '/tmp/', claimName: 'jumpserver-jms-web-logs')
      ]
    ) {
      node('jenkins-slave') {
        stage('git-checkout') {
          container('jnlp') {
            sh """
              echo "git checkout"
              echo "123456" > ~/git.txt
            """
          }
        }

        stage('mvn-package') {
          container('jnlp') {
            sh """
              echo "mvn --- $HOSTNAME"
            """
          }
        }

        stage('restart-Myapp') {
          container('myapp') {
            sh """
              echo "myapp --- $HOSTNAME"
            """
          }
        }
      }
    }

Option 2: use a Pod template created under Manage Jenkins directly, matched by the label given when the template was created.

    podTemplate {
      node('jenkins-podTemplate-1-jnlp') {
        stage('git-checkout') {
          container('jnlp') {
            sh """
              echo "git checkout"
              echo "123456" > ~/git.txt
            """
          }
        }

        stage('mvn-package') {
          container('jnlp') {
            sh """
              echo "mvn --- $HOSTNAME"
            """
          }
        }

        stage('restart-Myapp') {
          container('myapp') {
            sh """
              echo "myapp --- $HOSTNAME"
              cat ~/git.txt
            """
          }
        }
      }
    }

Building the tools image used in the podTemplate

Tools installed: docker, crictl, kubectl, helm, git, net-tools

Create a directory for the image build, download the command-line tools into it, and generate the crictl client configuration file there

    $ mkdir tools-image && cd tools-image/

    $ wget https://get.helm.sh/helm-v3.11.0-linux-amd64.tar.gz && tar xzvf helm-v3.11.0-linux-amd64.tar.gz && mv linux-amd64/helm ./

    $ curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl" && chmod +x kubectl

    $ wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.26.0/crictl-v1.26.0-linux-amd64.tar.gz && tar xzvf crictl-v1.26.0-linux-amd64.tar.gz

    $ cp /usr/bin/docker .

    $ cat > ./crictl.yaml <<EOF
    runtime-endpoint: unix:///run/containerd/containerd.sock
    image-endpoint: unix:///run/containerd/containerd.sock
    timeout: 2
    debug: false
    pull-image-on-create: true
    EOF

Create the Dockerfile

    $ cat > Dockerfile <<'EOF'
    FROM ubuntu:20.04

    ENV DEBIAN_FRONTEND=noninteractive
    ENV LANG en_US.UTF-8
    ENV LANGUAGE en_US:en
    ENV LC_ALL en_US.UTF-8

    USER root
    COPY ./crictl ./kubectl ./helm ./docker /usr/bin/
    COPY ./crictl.yaml /etc/crictl.yaml

    RUN set -x; apt-get update \
        && apt-get install -y sudo curl vim git net-tools tzdata \
        && ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime \
        && rm -rf /var/lib/apt/lists/*

    CMD ["sleep", "9999999"]
    EOF

Build the tools image

    $ docker build -t putianhui/jenkins-tools:v1 .

Keep the following points in mind when using the image:

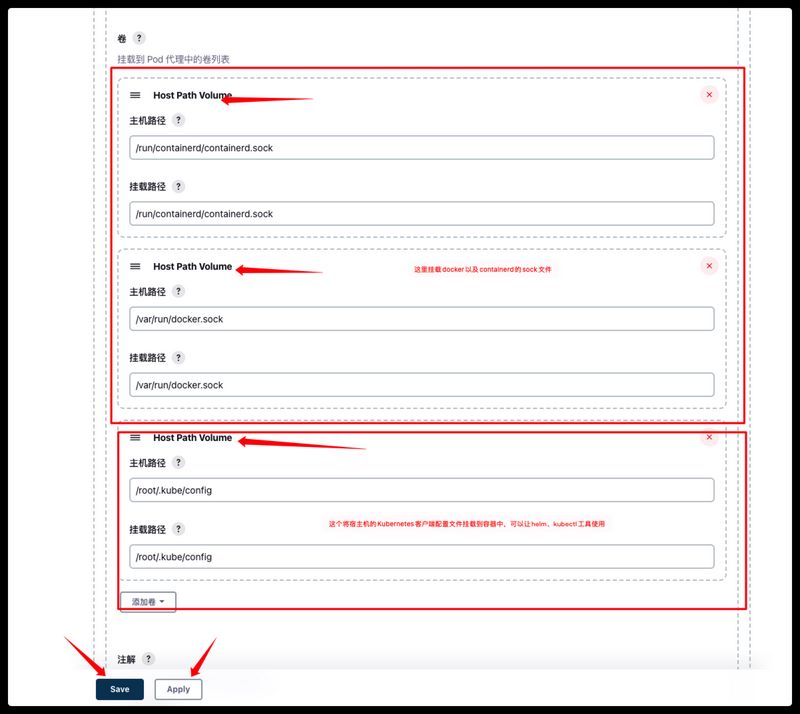

1. To use helm and kubectl, mount the Kubernetes client configuration file into the container at /root/.kube/config

2. To use crictl, mount the containerd socket into the container at /run/containerd/containerd.sock

3. To use docker, mount the docker socket into the container at /var/run/docker.sock
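For a quick check outside Jenkins, the three mounts listed above could be expressed as hostPath volumes on a standalone pod. This is a sketch under assumptions: the pod name `jenkins-tools-test` and the helper `print_tools_pod` are made up for illustration, and it assumes the node that runs the pod has a kubeconfig at /root/.kube/config and both sockets present, which is typically only true on nodes set up for it.

```shell
#!/usr/bin/env bash
# print_tools_pod: hypothetical helper that prints a Pod manifest mounting the
# kubeconfig and the two runtime sockets into the tools container.
print_tools_pod() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: jenkins-tools-test   # assumption: illustrative name
spec:
  containers:
    - name: tools
      image: putianhui/jenkins-tools:v1
      volumeMounts:
        - name: kubeconfig
          mountPath: /root/.kube/config
        - name: containerd-sock
          mountPath: /run/containerd/containerd.sock
        - name: docker-sock
          mountPath: /var/run/docker.sock
  volumes:
    - name: kubeconfig
      hostPath:
        path: /root/.kube/config   # assumption: kubeconfig location on the node
        type: File
    - name: containerd-sock
      hostPath:
        path: /run/containerd/containerd.sock
        type: Socket
    - name: docker-sock
      hostPath:
        path: /var/run/docker.sock
        type: Socket
EOF
}

# Example: print_tools_pod | kubectl apply -f -
```

With `type: File` and `type: Socket`, the pod fails to start on a node where a path is missing instead of silently creating an empty directory there.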

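Testing a multi-container build Pipeline with Pod templates

Each build starts a Jenkins agent pod in the Kubernetes cluster to run that build.

Under Manage Jenkins > Manage Nodes, configure a generic pod template that contains two containers: the Jenkins jnlp container and the Jenkins tools container.

In each project's Jenkinsfile, declare the container for that project's build language and pull in the generic pod template through inheritance. The compile stage of the pipeline then runs in the language container and produces the artifact, while Docker image packaging and Helm deployment run in the tools container.

Step 1: under Manage Jenkins > Manage Nodes, add a pod template with two containers: the Jenkins jnlp container and the Jenkins tools container (the image just built).

Because the tools container uses docker and crictl, the socket files of both services must be mounted at the corresponding paths in the container. Because it also uses helm and kubectl, the Kubernetes client configuration file ~/.kube/config must be mounted into the container as well.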

Step 2: create a job that uses the following Jenkinsfile

    pipeline {
      agent {
        kubernetes {
          cloud 'kubernetes'
          namespace 'default'
          workingDir '/home/jenkins/agent'
          inheritFrom 'jenkins-podTemplate-1'
          defaultContainer 'jnlp'
          yaml """\
            apiVersion: v1
            kind: Pod
            metadata:
              labels:
                jenkins-jnlp: "true"
                jenkins/label: "jenkins-slave"
            spec:
              containers:
              - image: "maven:alpine"
                imagePullPolicy: "IfNotPresent"
                name: "maven"
                command:
                - cat
                tty: true
              - image: "golang:1.16.5"
                imagePullPolicy: "IfNotPresent"
                name: "golang"
                command:
                - sleep
                args:
                - 99d
          """.stripIndent()
        }
      }

      stages {
        stage('Show Java version in jnlp') {
          steps {
            container('jnlp') {
              sh """
                id;java -version
                pwd
                touch "jnlp-version.txt"
              """
            }
          }
        }

        stage('Show golang version') {
          steps {
            container('golang') {
              sh """
                go version
                pwd
                ls -l
                touch "golang-version.txt"
              """
            }
          }
        }

        stage('Show maven version') {
          steps {
            container('maven') {
              sh """
                id;mvn -version
                pwd
                ls -l
                touch "maven-version.txt"
              """
            }
          }
        }

        stage('Show tools info') {
          steps {
            container('tools') {
              sh """
                kubectl get pod
                crictl images
                #docker images
                helm list
                pwd
                touch "tools-version.txt"
                ls -l
              """
            }
          }
        }
      }
    }

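When the Jenkinsfile above runs, the Jenkins agent pod has four containers: jnlp and tools from the generic pod template, plus the golang and maven build containers declared in the yaml block. Inheritance makes it easy to combine them.

To build a Java application, inherit the generic pod template and declare only the maven container in the yaml block. A Node.js application works the same way: inherit the generic pod template and declare only a nodejs container.

In each pipeline stage, use the language container to build the code, the tools container to package the Docker image, and the tools container again to release with Helm.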

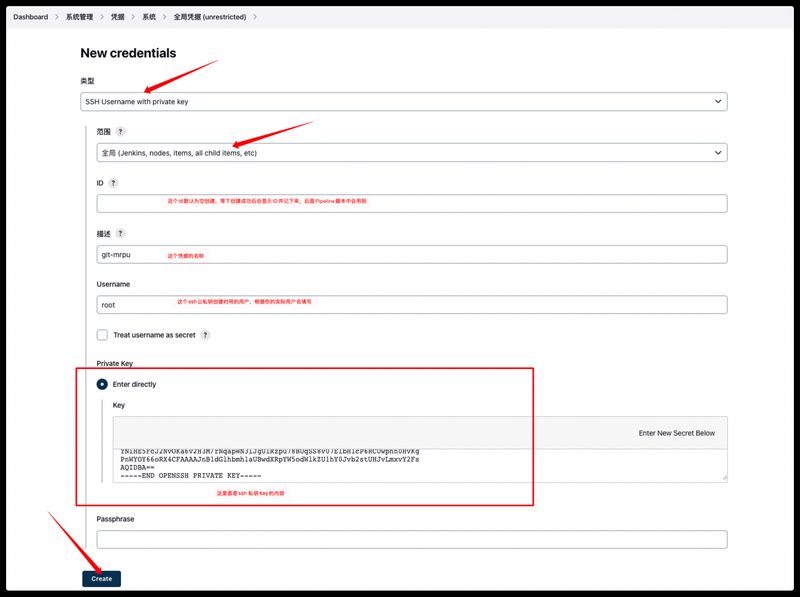

实践验证 1、创建一个ssh公私钥,将公钥上传至gitlab个人中的ssh秘钥中。

2、将ssh私钥的内容拿出来在Jenkins上创建凭据,系统管理—Manage Credentials—System—全局凭据—Add Credentials

3、通过下面的Pipeline脚本构建流水线测试

    def git_auth = "c30ba02f-f182-476d-87fe-983bc56969b8"
    def git_url = "ssh://git@git.mrpu.cn:32022/ops/devops-test.git"
    env.Deployment = "dev"
    env.jar_name = "digital-assetd"
    env.harbor_host = "harbor.mrpu.cn/library"

    pipeline {
        agent {
            kubernetes {
                cloud 'kubernetes'
                namespace 'default'
                workingDir '/home/jenkins/agent'
                inheritFrom 'jenkins-podTemplate-1'
                defaultContainer 'jnlp'
                // Extra maven container, merged with the inherited pod template
                yaml """\
                    apiVersion: v1
                    kind: Pod
                    metadata:
                      labels:
                        jenkins-jnlp: "true"
                        jenkins/label: "jenkins-slave"
                    spec:
                      containers:
                      - image: "maven:alpine"
                        imagePullPolicy: "IfNotPresent"
                        name: "maven"
                        command:
                        - cat
                        tty: true
                    """.stripIndent()
            }
        }

        parameters {
            gitParameter name: 'git_branch',
                type: 'PT_BRANCH_TAG',
                branchFilter: 'origin/(.*)',
                defaultValue: 'main',
                selectedValue: 'DEFAULT',
                sortMode: 'DESCENDING_SMART',
                description: 'Select the branch to release'
        }

        stages {
            stage('1. Check out code') {
                steps {
                    container('tools') {
                        script {
                            checkout([
                                $class: 'GitSCM',
                                branches: [[name: "${git_branch}"]],
                                doGenerateSubmoduleConfigurations: false,
                                extensions: [],
                                submoduleCfg: [],
                                userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_url}"]]
                            ])
                        }
                    }
                }
            }

            stage('2. Build the artifact with Maven') {
                steps {
                    container('maven') {
                        sh """
                            id;mvn -version
                            pwd
                            ls -l
                            source /etc/profile &> /dev/null
                            #rm -rf /root/.m2/repository/*
                            # Build the Maven artifact
                            #mvn clean package -Dmaven.test.skip=true -P ${Deployment}
                        """
                    }
                }
            }

            stage('3. Build the image & push to the registry') {
                steps {
                    sh '''
                        docker_img_name="${harbor_host}/${jar_name}:${BUILD_NUMBER}"
                        echo \$docker_img_name
                        #docker build -t \$docker_img_name .
                        #docker push \$docker_img_name
                    '''
                }
            }

            stage('4. Deploy with Helm') {
                steps {
                    container('tools') {
                        sh """
                            kubectl get pod
                            crictl images
                            #docker images
                            helm list
                            pwd
                            touch "tools-version.txt"
                            ls -l
                            git version
                        """
                    }
                }
            }
        }
    }
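Stage 3 composes the image reference from the Harbor project path, the artifact name, and the Jenkins build number. A minimal sketch of that composition follows; `image_ref` is a hypothetical helper that is not part of the pipeline, and `42` stands in for the `BUILD_NUMBER` that Jenkins supplies at run time.

```shell
#!/bin/sh
# Hypothetical helper mirroring stage 3: <registry/project>/<name>:<build number>.
image_ref() {
  local registry="$1" name="$2" build="$3"
  printf '%s/%s:%s\n' "$registry" "$name" "$build"
}

# 42 is a placeholder for the BUILD_NUMBER that Jenkins injects.
image_ref "harbor.mrpu.cn/library" "digital-assetd" "42"
```

Tagging by build number keeps every build addressable, which makes rolling back to an earlier image a matter of picking an older tag.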

Installing GitLab with Helm 3

Install GitLab

Add the GitLab Helm repository and update it.

    $ helm repo add gitlab https://charts.gitlab.io
    $ helm repo update

Create a values.yaml manifest for GitLab. The content below is my modified version. (The persistence switches are written as `enabled`, which is the key the chart reads; the original `enable` key would be silently ignored.)

    $ vim gitlab-values.yaml
    global:
      edition: ce
      application:
        create: false
        allowClusterRoles: true
      shell:
        port: 32022
      hosts:
        domain: mrpu.cn
        https: true
        ssh: git.mrpu.cn
        gitlab:
          name: git.mrpu.cn
          https: true
        minio:
          name: minio-git.mrpu.cn
        registry:
          name: registry-git.mrpu.cn
        smartcard:
          name: smartcard-git.mrpu.cn
        kas:
          name: kas-git.mrpu.cn
        pages:
          name: pages-git.mrpu.cn
      ingress:
        enabled: true
        apiVersion: 'networking.k8s.io/v1'
        configureCertmanager: false
        provider: nginx
        class: nginx
        tls:
          enabled: true
          secretName: 'mrpu-cn-tls'
        path: /
        pathType: Prefix
      time_zone: Asia/Shanghai

    upgradeCheck:
      enabled: false

    certmanager:
      installCRDs: false
      install: false

    nginx-ingress:
      enabled: false

    prometheus:
      install: false

    gitlab:
      gitlab-shell:
        enabled: true
        service:
          type: 'NodePort'
          nodePort: 32022
      gitaly:
        persistence:
          enabled: true
          accessMode: 'ReadWriteOnce'
          size: '100Gi'
          storageClass: 'nfs-sc'

    minio:
      persistence:
        enabled: true
        accessMode: 'ReadWriteOnce'
        size: '100Gi'
        storageClass: 'nfs-sc'

    redis:
      install: true
      cluster:
        enabled: false
      master:
        persistence:
          enabled: true
          size: '100Gi'
          storageClass: 'nfs-sc'

    postgresql:
      persistence:
        enabled: true
        size: '100Gi'
        storageClass: 'nfs-sc'

Install GitLab with helm

    $ helm install gitlab --version 6.8.1 -f ./gitlab-values.yaml gitlab/gitlab

Retrieve the root login password

    $ kubectl get secret gitlab-gitlab-initial-root-password \
        -ojsonpath='{.data.password}' | base64 --decode
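The pipe through `base64 --decode` is needed because Kubernetes stores every value under a Secret's `.data` field base64-encoded. The sketch below shows only the decoding step on a made-up value; `decode_secret_value` is a hypothetical helper, and `czNjcjN0` is sample data, not a real GitLab password.

```shell
#!/bin/sh
# Hypothetical helper: decode one base64-encoded value taken from a Secret's .data field.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# 'czNjcjN0' is made-up sample data that decodes to the string s3cr3t.
decode_secret_value 'czNjcjN0'
echo
```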

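Installing PrometheusAlert with Helm 3

PrometheusAlert stores its data in MySQL, so install MySQL and create the database first. Once the service pod is running, import the corresponding SQL.

    # Create the database
    $ mysql -uroot -p
    mysql> CREATE DATABASE prometheusalert CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;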

Download the official Helm package

    $ wget https://github.com/feiyu563/PrometheusAlert/releases/download/v4.8.2/helm.zip
    $ unzip helm.zip
    $ cd helm/prometheusalert

Edit the app.conf file inside the Helm package. Only the modified settings are shown below; everything else keeps its default. Whenever the configuration changes, edit app.conf in the helm directory again and then run helm upgrade to push it to the cluster. The pod may need to be recreated before it picks up the new configuration.

    $ cd config
    $ vim app.conf
    appname = PrometheusAlert

    login_user=admin
    login_password=putianhui

    db_driver=mysql
    db_host=192.168.1.43
    db_port=3306
    db_user=root
    db_password=putianhui
    db_name=prometheusalert

    AlertRecord=1
    RecordLive=1

    open-dingding=0
    open-weixin=0
    open-feishu=1
    fsurl=https://open.feishu.cn/open-apis/bot/hook/xxxxxxxxx
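Because a mistyped key in app.conf only shows up after the pod restarts, it can help to read a few values back before running helm upgrade. The sketch below assumes plain `key=value` lines with optional spaces around `=`, as in the excerpt above; `conf_get` is a hypothetical helper and the sample file is generated only for the demonstration.

```shell
#!/bin/sh
# Hypothetical helper: print the value of KEY from a key=value style config file.
conf_get() {
  local key="$1" file="$2"
  sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*//p" "$file" | head -n 1
}

# Demo on a throwaway file that mimics a few lines of app.conf.
demo_conf="$(mktemp)"
cat > "$demo_conf" <<'EOF'
appname = PrometheusAlert
db_driver=mysql
db_name=prometheusalert
open-feishu=1
EOF

echo "db_name:     $(conf_get db_name "$demo_conf")"
echo "open-feishu: $(conf_get open-feishu "$demo_conf")"
rm -f "$demo_conf"
```

Against the real file, the call would be `conf_get db_host config/app.conf` from the chart directory.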

Edit the chart's values.yaml. Only the modified settings are shown below; anything not shown keeps its default.

    $ cd ../ && vim values.yaml
    ingress:
      enabled: false

    image:
      repository: feiyu563/prometheus-alert:v4.8.2
      pullPolicy: IfNotPresent

Install it into the Kubernetes cluster with helm

    $ helm install prometheus-alert ./

Create the ingress manifest and apply it

    $ vim prometheusalert-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: prometheusalert-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: prometheusalert.mrpu.cn
          http:
            paths:
              - backend:
                  service:
                    name: prometheus-alert-prometheusalert
                    port:
                      number: 8080
                path: /
                pathType: Prefix
      tls:
        - hosts:
            - prometheusalert.mrpu.cn
          secretName: mrpu-cn-tls

    $ kubectl apply -f ./prometheusalert-ingress.yaml

Import the template SQL file into the prometheusalert MySQL database

    $ mysql -uroot -p
    mysql> use prometheusalert;
    mysql> source /tmp/init.sql

At this point the platform is reachable at the hostname of the manually created ingress, https://prometheusalert.mrpu.cn. The login is the admin/putianhui pair configured in app.conf.

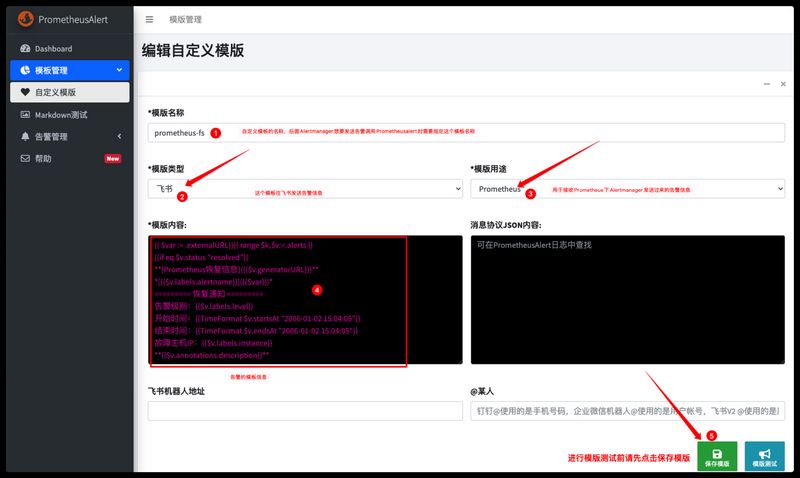

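So that the Alertmanager in kube-prometheus can later use PrometheusAlert as its alert hub, we need to create a custom template for sending Prometheus alerts to Feishu.

The custom template is as follows: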

    {{ $var := .externalURL}}{{ range $k,$v:=.alerts }}
    {{if eq $v.status "resolved"}}**[Prometheus recovery notice]({{$v.generatorURL}})**
    **======== 💚 Alert resolved 💚 ========**
    🙋 Alert name: [{{$v.labels.alertname}}]({{$v.generatorURL}})
    🛎️ Severity: {{$v.labels.severity}}
    ⏱️ Start time: {{TimeFormat $v.startsAt "2006-01-02 15:04:05"}}
    ⏱️ End time: {{TimeFormat $v.endsAt "2006-01-02 15:04:05"}}
    📋 Namespace: {{$v.labels.namespace}}
    🏷️ Job: {{$v.labels.job}}
    👀 Instance: {{$v.labels.instance}}
    🚀 Pod: {{$v.labels.pod}}
    🌕 Service: {{$v.labels.service}}
    {{else}}**[Prometheus alert notice]({{$v.generatorURL}})**
    **======== 💔 Alert firing 💔 ========**
    🙋 Alert name: [{{$v.labels.alertname}}]({{$v.generatorURL}})
    🛎️ Severity: {{$v.labels.severity}}
    ⏱️ Start time: {{TimeFormat $v.startsAt "2006-01-02 15:04:05"}}
    {{if eq $v.endsAt "0001-01-01T00:00:00Z"}}⏱️ End time: {{ printf "Alert is still firing!" }}
    {{else}}⏱️ End time: {{TimeFormat $v.endsAt "2006-01-02 15:04:05"}}
    {{end}}📋 Namespace: {{$v.labels.namespace}}
    🏷️ Job: {{$v.labels.job}}
    👀 Instance: {{$v.labels.instance}}
    🚀 Pod: {{$v.labels.pod}}
    🌕 Service: {{$v.labels.service}}
    {{ $urimsg:="" }}{{ range $key,$value:=$v.labels}}{{$urimsg = print $urimsg $key "=\"" $value "\"," }}{{end}}{{$data:=urlquery $urimsg }}[【Click to silence this alert】]({{$var}}/#/silences/new?filter=%7B{{SplitString $data 0 -3}}%7D)
    📝 **Alert Detail**:
    **{{$v.annotations.description}}**
    {{end}}{{ end }}
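The last link in the template builds an Alertmanager "new silence" URL: it joins every label as `key="value",`, URL-encodes the result, and drops the final three characters, which are the encoded trailing comma (`%2C`). The sketch below reproduces that string handling in POSIX shell so the result can be inspected outside PrometheusAlert. It assumes that `SplitString $data 0 -3` trims three characters from the end, which is what the template's usage suggests, and that label values are plain ASCII. `urlencode` and `silence_url` are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: percent-encode a string the way a query escaper would
# (unreserved characters kept, space as '+', everything else as %XX). ASCII only.
urlencode() {
  local rest="$1" out="" c
  while [ -n "$rest" ]; do
    c="${rest%"${rest#?}"}"   # first character of the remaining input
    rest="${rest#?}"
    case "$c" in
      [a-zA-Z0-9._~-]) out="$out$c" ;;
      ' ') out="$out+" ;;
      *) out="$out$(printf '%%%02X' "'$c")" ;;
    esac
  done
  printf '%s' "$out"
}

# Hypothetical helper: silence_url BASE key=value [key=value ...]
silence_url() {
  local base="$1" msg="" kv enc
  shift
  for kv in "$@"; do
    msg="$msg${kv%%=*}=\"${kv#*=}\","
  done
  enc="$(urlencode "$msg")"
  # ${enc%???} drops the last three characters, i.e. the encoded trailing comma.
  printf '%s/#/silences/new?filter=%%7B%s%%7D\n' "$base" "${enc%???}"
}

silence_url "http://alertmanager.mrpu.cn" alertname=Watchdog severity=warning
```

The `%7B` and `%7D` wrapped around the filter are the encoded `{` and `}` that Alertmanager expects around a matcher list.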

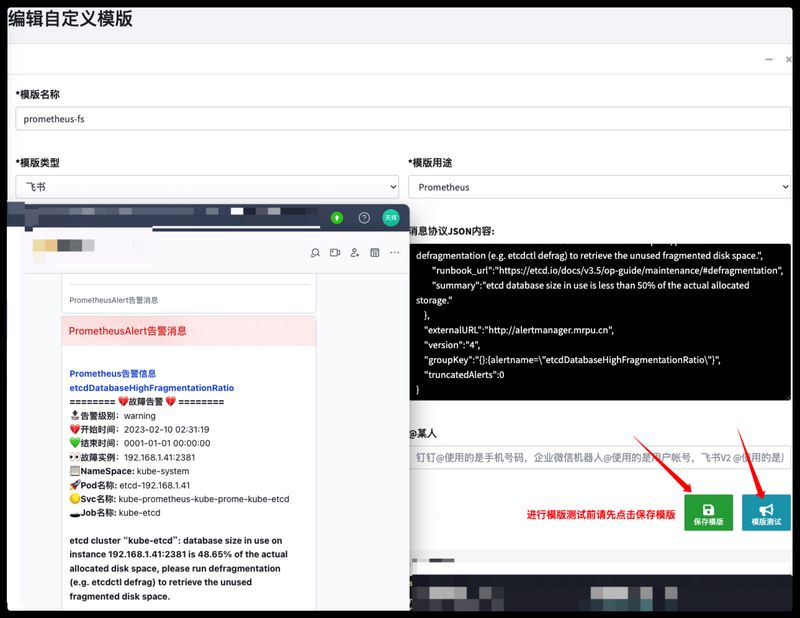

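To check that the template works, paste the test JSON below into the message protocol JSON content field, click the template test button, and verify that Feishu receives the formatted alert message.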

    {
      "receiver": "default-alert",
      "status": "firing",
      "alerts": [
        {
          "status": "firing",
          "labels": {
            "alertname": "etcdDatabaseHighFragmentationRatio",
            "endpoint": "http-metrics",
            "instance": "192.168.1.41:2381",
            "job": "kube-etcd",
            "namespace": "kube-system",
            "pod": "etcd-192.168.1.41",
            "prometheus": "default/kube-prometheus-kube-prome-prometheus",
            "service": "kube-prometheus-kube-prome-kube-etcd",
            "severity": "warning"
          },
          "annotations": {
            "description": "etcd cluster \"kube-etcd\": database size in use on instance 192.168.1.41:2381 is 48.65% of the actual allocated disk space, please run defragmentation (e.g. etcdctl defrag) to retrieve the unused fragmented disk space.",
            "runbook_url": "https://etcd.io/docs/v3.5/op-guide/maintenance/#defragmentation",
            "summary": "etcd database size in use is less than 50% of the actual allocated storage."
          },
          "startsAt": "2023-02-10T02:31:19.067Z",
          "endsAt": "0001-01-01T00:00:00Z",
          "generatorURL": "http://prometheus.mrpu.cn/graph?g0.expr=%28last_over_time%28etcd_mvcc_db_total_size_in_use_in_bytes%5B5m%5D%29+%2F+last_over_time%28etcd_mvcc_db_total_size_in_bytes%5B5m%5D%29%29+%3C+0.5\u0026g0.tab=1",
          "fingerprint": "ef8e3b0c3c4071e2"
        }
      ],
      "groupLabels": {
        "alertname": "etcdDatabaseHighFragmentationRatio"
      },
      "commonLabels": {
        "alertname": "etcdDatabaseHighFragmentationRatio",
        "endpoint": "http-metrics",
        "instance": "192.168.1.41:2381",
        "job": "kube-etcd",
        "namespace": "kube-system",
        "pod": "etcd-192.168.1.41",
        "prometheus": "default/kube-prometheus-kube-prome-prometheus",
        "service": "kube-prometheus-kube-prome-kube-etcd",
        "severity": "warning"
      },
      "commonAnnotations": {
        "description": "etcd cluster \"kube-etcd\": database size in use on instance 192.168.1.41:2381 is 48.65% of the actual allocated disk space, please run defragmentation (e.g. etcdctl defrag) to retrieve the unused fragmented disk space.",
        "runbook_url": "https://etcd.io/docs/v3.5/op-guide/maintenance/#defragmentation",
        "summary": "etcd database size in use is less than 50% of the actual allocated storage."
      },
      "externalURL": "http://alertmanager.mrpu.cn",
      "version": "4",
      "groupKey": "{}:{alertname=\"etcdDatabaseHighFragmentationRatio\"}",
      "truncatedAlerts": 0
    }

Find the webhook notification URL of the custom template we just added and note it down. We will need it shortly when adding webhook_configs to Alertmanager.
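For in-cluster callers such as Alertmanager, the webhook address follows a predictable shape: the Service name and namespace form the host, and the `type` and `tpl` query parameters select the channel and the template. The sketch below assembles it from those parts. `webhook_url` is a hypothetical helper, and the values are taken from the Alertmanager configuration used later in this document (service `prometheus-alert-prometheusalert` in namespace `default`, template named `prometheus-fs`).

```shell
#!/bin/sh
# Hypothetical helper: webhook_url SERVICE NAMESPACE PORT TYPE TEMPLATE
webhook_url() {
  local svc="$1" ns="$2" port="$3" type="$4" tpl="$5"
  printf 'http://%s.%s:%s/prometheusalert?type=%s&tpl=%s\n' \
    "$svc" "$ns" "$port" "$type" "$tpl"
}

# type=fs selects Feishu; tpl is the name given to the custom template.
webhook_url prometheus-alert-prometheusalert default 8080 fs prometheus-fs
```

If the template is saved under a different name, only the `tpl` value changes.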

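Installing kube-prometheus with Helm 3

Install kube-prometheus

We install prometheus-operator through Helm. Check the upstream compatibility matrix for which component versions work together, and see the official chart documentation for a detailed Helm installation guide.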

Add the Helm repository and update it

    $ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
    $ helm repo update

Create the values manifest kube-prometheus-values.yaml

    $ vim kube-prometheus-values.yaml
    alertmanager:
      enabled: true
      config:
        global:
          resolve_timeout: 5m
        route:
          group_by: ['alertname']
          group_wait: 30s
          group_interval: 1m
          repeat_interval: 5m
          receiver: 'default-alert'
          routes:
            - receiver: 'null'
              matchers:
                - alertname =~ "InfoInhibitor|Watchdog"
        receivers:
          - name: 'null'
          - name: 'default-alert'
            webhook_configs:
              - url: 'http://prometheus-alert-prometheusalert.default:8080/prometheusalert?type=fs&tpl=prometheus-fs'
        templates:
          - '/etc/alertmanager/config/*.tmpl'
      ingress:
        enabled: false
      alertmanagerSpec:
        image:
          registry: quay.io
          repository: prometheus/alertmanager
          tag: v0.25.0
          sha: ''
        externalUrl: 'http://alertmanager.mrpu.cn'
        storage:
          volumeClaimTemplate:
            spec:
              storageClassName: nfs-sc
              accessModes: ['ReadWriteOnce']
              resources:
                requests:
                  storage: 100Gi

    prometheus:
      enabled: true
      ingress:
        enabled: false
      prometheusSpec:
        image:
          registry: quay.io
          repository: prometheus/prometheus
          tag: v2.41.0
          sha: ''
        externalUrl: 'http://prometheus.mrpu.cn'
        storageSpec:
          volumeClaimTemplate:
            spec:
              storageClassName: nfs-sc
              accessModes: ['ReadWriteOnce']
              resources:
                requests:
                  storage: 100Gi

    grafana:
      enabled: true
      defaultDashboardsTimezone: 'Asia/Shanghai'
      adminPassword: 'putianhui'
      ingress:
        enabled: true
        ingressClassName: nginx
        hosts:
          - grafana.mrpu.cn
        tls:
          - secretName: mrpu-cn-tls
            hosts:
              - grafana.mrpu.cn
      persistence:
        type: pvc
        enabled: true
        storageClassName: nfs-sc
        accessModes:
          - ReadWriteOnce
        size: 100Gi

    prometheusOperator:
      enabled: true
      admissionWebhooks:
        enabled: true
        patch:
          enabled: true
          image:
            registry: docker.io
            repository: putianhui/ingress-nginx-kube-webhook-certgen
            tag: v1.3.0

Install with helm

    $ helm install kube-prometheus --version 44.4.1 -f ./kube-prometheus-values.yaml prometheus-community/kube-prometheus-stack

Create the ingress for Alertmanager and Prometheus. The values manifest above only enables automatic ingress creation for Grafana, so the other two have to be created by hand.

    $ vim alertmanager-prometheus-ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: alertmanager-prometheus-ingress
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      ingressClassName: nginx
      rules:
        - host: alertmanager.mrpu.cn
          http:
            paths:
              - backend:
                  service:
                    name: kube-prometheus-kube-prome-alertmanager
                    port:
                      number: 9093
                path: /
                pathType: Prefix
        - host: prometheus.mrpu.cn
          http:
            paths:
              - backend:
                  service:
                    name: kube-prometheus-kube-prome-prometheus
                    port:
                      number: 9090
                path: /
                pathType: Prefix
      tls:
        - hosts:
            - alertmanager.mrpu.cn
            - prometheus.mrpu.cn
          secretName: mrpu-cn-tls
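The backend names `kube-prometheus-kube-prome-alertmanager` and `kube-prometheus-kube-prome-prometheus` look truncated because they are. The sketch below shows how such a prefix can arise, assuming the chart joins the release name and chart name, cuts the result to 26 characters, and then appends the component name. The 26-character limit and the "release name already contains chart name" shortcut are assumptions about the chart's helper template; they are consistent with the names above but should be confirmed with `kubectl get svc`. `chart_fullname` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: derive the resource-name prefix from release and chart names.
chart_fullname() {
  local release="$1" chart="$2" full
  case "$release" in
    *"$chart"*) full="$release" ;;            # release name already contains the chart name
    *) full="${release}-${chart}" ;;
  esac
  full="$(printf '%.26s' "$full")"            # assumed 26-character limit
  printf '%s\n' "${full%-}"                   # drop a dangling hyphen left by truncation
}

prefix="$(chart_fullname kube-prometheus kube-prometheus-stack)"
echo "${prefix}-alertmanager"
echo "${prefix}-prometheus"
```

Choosing a different release name changes these Service names, so the ingress backends have to be updated to match.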

Create and apply the ingress rules

    $ kubectl apply -f ./alertmanager-prometheus-ingress.yaml

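Once the installation succeeds, Prometheus, Grafana, and Alertmanager are reachable through their ingress hostnames. The Grafana password is the adminPassword value set in the values manifest.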

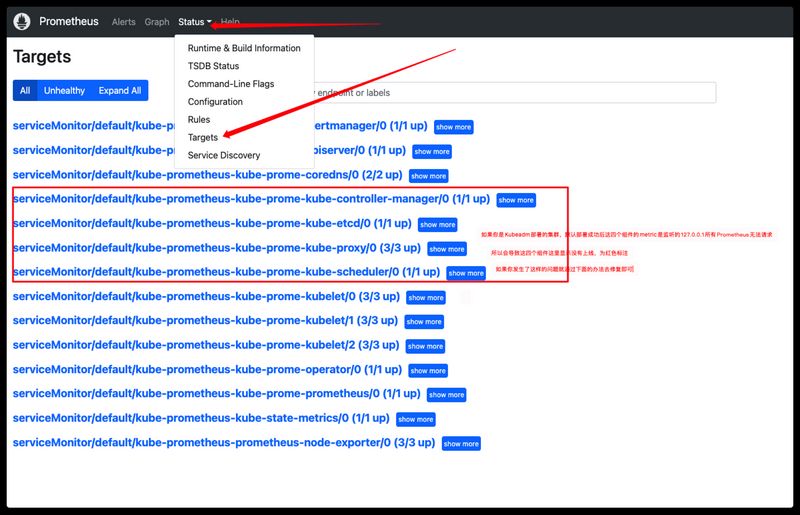

Fixing targets that show as down in Prometheus

To fix etcd, controller-manager, and scheduler showing as down in Prometheus, do the following on the master node. In each manifest, set the flag shown so that the component listens on all interfaces.

    $ cd /etc/kubernetes/manifests

    $ vim etcd.yaml
    --listen-metrics-urls=http://0.0.0.0:2381

    $ vim kube-controller-manager.yaml
    --bind-address=0.0.0.0

    $ vim kube-scheduler.yaml
    --bind-address=0.0.0.0

    $ systemctl restart kubelet
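After editing three manifests by hand it is easy to miss one, so a quick check that each file really contains its flag can save a restart cycle. The sketch below does a fixed-string search; `manifest_has_flag` is a hypothetical helper, and the demo runs against a throwaway file rather than a real manifest.

```shell
#!/bin/sh
# Hypothetical helper: succeed if FILE contains FLAG as a literal string.
manifest_has_flag() {
  local file="$1" flag="$2"
  grep -qF -- "$flag" "$file"
}

# Demo on a throwaway file that mimics part of a static pod manifest.
demo_manifest="$(mktemp)"
cat > "$demo_manifest" <<'EOF'
spec:
  containers:
  - command:
    - kube-scheduler
    - --bind-address=0.0.0.0
EOF

if manifest_has_flag "$demo_manifest" "--bind-address=0.0.0.0"; then
  echo "flag present"
else
  echo "flag missing"
fi
rm -f "$demo_manifest"
```

On a master node the same call would target, for example, /etc/kubernetes/manifests/kube-scheduler.yaml.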

To fix kube-proxy showing as down in Prometheus: this component is deployed as a DaemonSet, so it is enough to edit its ConfigMap in the cluster and then restart the pods.

    $ kubectl edit cm/kube-proxy -n kube-system
    apiVersion: v1
    data:
      config.conf: |-
        apiVersion: kubeproxy.config.k8s.io/v1alpha1
        metricsBindAddress: "0.0.0.0"
        ...... (rest omitted)

    $ kubectl delete pod -l k8s-app=kube-proxy -n kube-system

Connecting PrometheusAlert to send Feishu alerts

The PrometheusAlert platform is already deployed. Now edit the custom values file for the kube-prometheus Helm release and add the PrometheusAlert webhook_configs entry under the Alertmanager configuration.

    $ vim kube-prometheus-values.yaml
    .... omitted ....
    alertmanager:
      enabled: true
      config:
        global:
          resolve_timeout: 5m
        route:
          group_by: ['alertname']
          group_wait: 30s
          group_interval: 1m
          repeat_interval: 5m
          receiver: 'default-alert'
          routes:
            - receiver: 'null'
              matchers:
                - alertname =~ "InfoInhibitor|Watchdog"
        receivers:
          - name: 'null'
          - name: 'default-alert'
            webhook_configs:
              - url: 'http://prometheus-alert-prometheusalert.default:8080/prometheusalert?type=fs&tpl=prometheus-fs'
    .... omitted ....
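In this route tree, alerts whose name matches `InfoInhibitor|Watchdog` go to the `null` receiver and everything else falls through to `default-alert`, which forwards to PrometheusAlert. Alertmanager regex matchers are anchored, so the pattern must match the whole alert name. The sketch below imitates that decision with `grep -x` (whole-line match) purely as an illustration; `routes_to_null` is a hypothetical helper, not something Alertmanager provides.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the alert name fully matches the 'null' route's regex.
routes_to_null() {
  printf '%s\n' "$1" | grep -Eqx 'InfoInhibitor|Watchdog'
}

for name in Watchdog InfoInhibitor WatchdogTest etcdDatabaseHighFragmentationRatio; do
  if routes_to_null "$name"; then
    echo "$name -> null"
  else
    echo "$name -> default-alert"
  fi
done
```

Watchdog and InfoInhibitor fire permanently by design, which is why they are kept away from the Feishu receiver.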

Upgrade the release with the updated values.yaml file

    $ helm upgrade kube-prometheus --version 44.4.1 -f ./kube-prometheus-values.yaml prometheus-community/kube-prometheus-stack

As soon as Prometheus fires an alert, its content shows up in Feishu.